# Customization

You can customize the experience (interaction flow and contents) with the Spoon character by editing specific files on the device that are linked to your release.

All these files are located in the Conf folder of your release folder (by default, C:/Program Files/SPooN/developers-1.6.0/release/Conf).

# Main configuration file for customization: release.conf

This file centralizes all the elements used to customize the experience with your release. It is written in JSON.

{

"profile": <string>,

"character": <string>,

"audio": <AudioSystemConfiguration>

"language": <LanguageEnum>,

"availableLanguages": [

<LanguageEnum>,

...

<LanguageEnum>

],

"databaseName": <string>,

"interactionFlow": <InteractionFlowConfiguration>,

"specificContents": [

<string>,

...

<string>

],

"specificDictationConstraints": [

<string>,

...

<string>

],

"specificDictationResultsCorrections": [

<string>,

...

<string>

],

"specificGrammarPronunciations": [

<string>,

...

<string>

],

"specificPronunciations": [

<string>,

...

<string>

],

"engagementZonesConfiguration": {

"inSocialZoneDistance": <float>,

"outSocialZoneDistance": <float>,

"inPersonalZoneDistance": <float>,

"outPersonalZoneDistance": <float>

},

"blobDetectionZone":

{

"vertices": [

[<float>, <float>],

...

[<float>, <float>]

],

"floorHeightThreshold": <float>

},

"backgroundImage": <string>,

"overrideShowCursor": <bool>

}

# "profile"

- description: corresponds to a specific configuration mode of the SDK

- type: string

WARNING

Do not modify or remove this field. It is for internal use for now and will be removed soon.

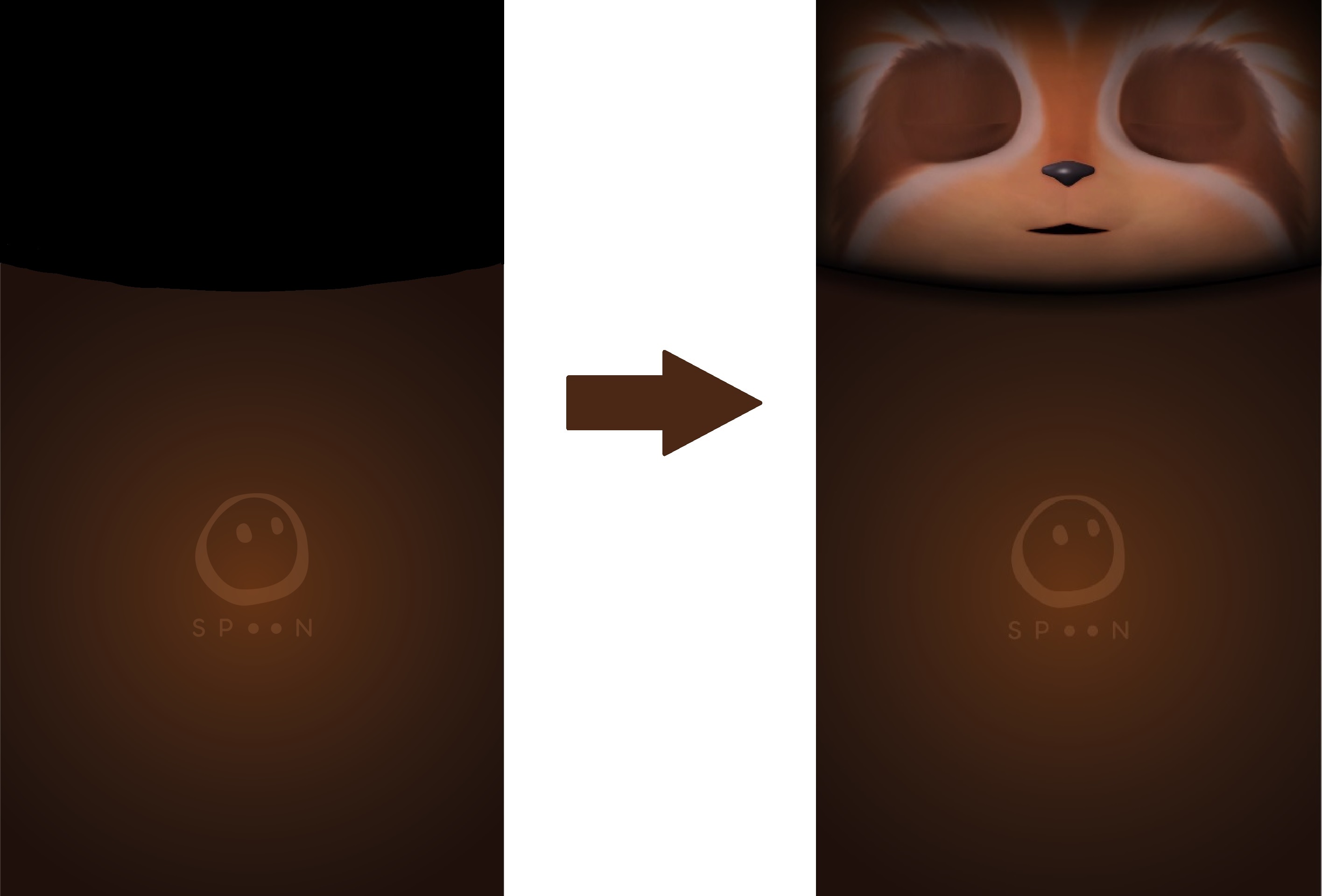

# "character"

- description: selection of the character that will be displayed at start (see the Character Selection section)

- type: string

- available characters: "Spoony", "Fogg", "Moon" (Spoony by default)

# Example:

"character": "Spoony"

# "audio"

You can set the default volume as well as the min and max volume that will be settable via the API.

"minVolume": 0.0,

"maxVolume": 1.0,

"currentVolume": 1.0

- minVolume: the minimum volume that will be accessible via the API (value between 0 and 1, defaults to 0)

- maxVolume: the maximum volume that will be accessible via the API (value between 0 and 1, defaults to 1)

- currentVolume: the volume that will be used when starting SPooN (value between minVolume and maxVolume, defaults to 1)
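The volume constraints above can be checked before deploying a configuration. The following is a minimal Python sketch, assuming the "audio" object has already been parsed into a dictionary; `check_audio` is a hypothetical helper written for this page, not part of the SPooN SDK.

```python
def check_audio(audio: dict) -> list[str]:
    """Return a list of problems found in an "audio" object (empty if none)."""
    # Defaults are taken from the field descriptions above.
    min_v = audio.get("minVolume", 0.0)
    max_v = audio.get("maxVolume", 1.0)
    cur_v = audio.get("currentVolume", 1.0)
    problems = []
    if not 0.0 <= min_v <= 1.0:
        problems.append("minVolume must be between 0 and 1")
    if not 0.0 <= max_v <= 1.0:
        problems.append("maxVolume must be between 0 and 1")
    if min_v > max_v:
        problems.append("minVolume must not exceed maxVolume")
    if not min_v <= cur_v <= max_v:
        problems.append("currentVolume must lie between minVolume and maxVolume")
    return problems
```

An empty list means the three values are consistent with each other. How the SDK itself reacts to inconsistent values is not documented here.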

# "language"

- description: default language of the character at start

- type: LanguageEnum

- possible values (if content is available for the language)

- "en_US" (English)

- "fr_FR" (French)

- "ja_JP" (Japanese)

- "zh_CN" (Chinese)

# Example:

"language": "fr_FR"

# "availableLanguages"

- description: list of the languages that can be selected during the interaction

- type: List<LanguageEnum>

- possible values (if content is available for the language)

- "en_US" (English)

- "fr_FR" (French)

- "ja_JP" (Japanese)

- "zh_CN" (Chinese)

# Example:

"availableLanguages": [

"en_US",

"fr_FR"

]

# "databaseName"

- description: name of local database for persistent data storage

- type: string

WARNING

Do not modify or remove this field. It is for internal use for now and will be removed soon.

# "interactionFlow"

- description: customization of the interaction flow

- type: InteractionFlowConfiguration

- content: see the Interaction flow customization section for details about the parameters

# "specificContents"

- description: list of SpecificContent files to be linked (see presentation of usage of SpecificContents)

- type: List<string>

- values: paths of the content files, relative to the Conf folder

# Example:

"specificContent": [

"specificContent.csv",

"Folder/specificContentInFolder.csv"

]

# "specificDictationConstraints"

- description: list of SpecificDictationConstraints files to be linked (see presentation of usage of SpecificDictationConstraints)

- type: List<string>

- values: paths of the content files, relative to the Conf folder

# Example:

"specificDictationConstraints": [

"specificDictationConstraint.csv",

"Folder/specificDictationConstraintInFolder.csv"

]

# "specificGrammarPronunciations"

- description: list of SpecificGrammarPronunciations files to be linked (see presentation of usage of SpecificGrammarPronunciations)

- type: List<string>

- values: paths of the content files, relative to the Conf folder

# Example:

"specificGrammarPronunciations": [

"specificGrammarPronunciation.csv",

"Folder/specificGrammarPronunciationInFolder.csv"

]

# "specificPronunciations"

- description: list of SpecificPronunciations files to be linked (see presentation of usage of SpecificPronunciations)

- type: List<string>

- values: paths of the content files, relative to the Conf folder

# Example:

"specificPronunciations": [

"specificPronunciation.csv",

"Folder/specificPronunciationInFolder.csv"

]
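Because every path in these lists is relative to the Conf folder, a broken link is easy to introduce when files are moved. The sketch below shows one way to list linked files that do not exist. It assumes release.conf is strict, UTF-8 encoded JSON; `missing_linked_files` is a hypothetical helper, not an SDK function.

```python
import json
from pathlib import Path

# Fields of release.conf whose values are lists of file paths
# relative to the Conf folder (as documented above).
LINKED_FILE_FIELDS = (
    "specificContents",
    "specificDictationConstraints",
    "specificGrammarPronunciations",
    "specificPronunciations",
)

def missing_linked_files(conf_dir) -> list[str]:
    """List "<field>: <path>" entries whose file is absent from conf_dir."""
    conf_dir = Path(conf_dir)
    # ASSUMPTION: release.conf parses as plain JSON in UTF-8.
    config = json.loads((conf_dir / "release.conf").read_text(encoding="utf-8"))
    missing = []
    for field in LINKED_FILE_FIELDS:
        for relative_path in config.get(field, []):
            if not (conf_dir / relative_path).is_file():
                missing.append(f"{field}: {relative_path}")
    return missing
```

Calling it with the path of your Conf folder returns an empty list when every linked file is present.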

# "keyboardCustomEmailAddresses"

- description: list of email suffixes that will be added to the displayed keyboards for quick access

- type: List<string>

- limit: 4 values max

# Format:

"keyboardCustomEmailAddresses":

[

"@address1",

"@address2",

...

]

# Example:

"keyboardCustomEmailAddresses":

[

"@spoon.ai",

"@spoon-cloud.com"

]

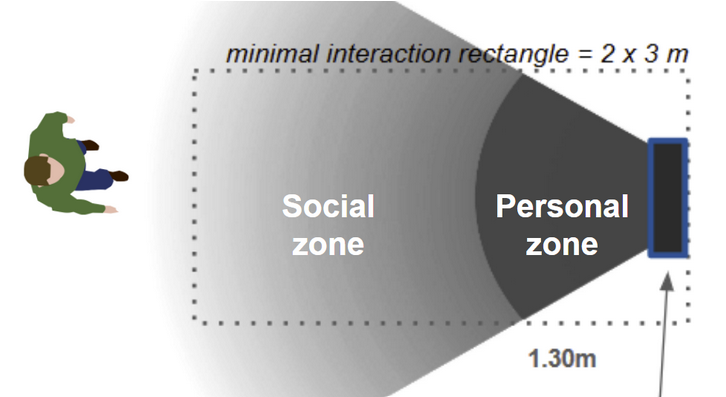

# "engagementZonesConfiguration"

- description: distances of the different interaction zones (for entry and exit)

- type: EngagementZonesConfiguration

# Format:

"engagementZonesConfiguration":

{

"inSocialZoneDistance": float,

"outSocialZoneDistance": float,

"inPersonalZoneDistance": float,

"outPersonalZoneDistance": float

}

- inSocialZoneDistance: distance (in meters) to enter the Social Zone

- outSocialZoneDistance: distance (in meters) to exit the Social Zone

- value must be greater than inSocialZoneDistance to avoid an oscillation for a person that would be detected at a distance close to inSocialZoneDistance (as they might be detected in and out of the zone in an infinite loop)

- inPersonalZoneDistance: distance (in meters) to enter the Personal Zone

- outPersonalZoneDistance: distance (in meters) to exit the Personal Zone

- value must be greater than inPersonalZoneDistance to avoid an oscillation for a person that would be detected at a distance close to inPersonalZoneDistance (as they might be detected in and out of the zone in an infinite loop)

# Example:

"engagementZonesConfiguration": {

"inSocialZoneDistance": 4.0,

"outSocialZoneDistance": 4.5,

"inPersonalZoneDistance": 1.3,

"outPersonalZoneDistance": 1.8

}
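The rule that each exit distance must exceed the matching entry distance can be checked mechanically. This is a small Python sketch under the assumption that the object has been parsed into a dictionary with all four keys present; `check_engagement_zones` is a hypothetical name.

```python
def check_engagement_zones(zones: dict) -> list[str]:
    """Return a list of problems found in an "engagementZonesConfiguration"."""
    problems = []
    for zone in ("Social", "Personal"):
        enter = zones[f"in{zone}ZoneDistance"]
        leave = zones[f"out{zone}ZoneDistance"]
        # The exit distance must be strictly greater than the entry distance,
        # otherwise a person standing near the boundary flips in and out.
        if leave <= enter:
            problems.append(
                f"out{zone}ZoneDistance must be greater than in{zone}ZoneDistance"
            )
    return problems
```

With the example values above (4.0 / 4.5 and 1.3 / 1.8) the function returns an empty list.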

# "blobDetectionZone"

- description: zone of detection of objects as users by the 3D camera

- usage: every element detected inside the zone defined in this field is treated as a user. Keep this zone restrictive so that it cannot include immobile objects (walls, furniture, etc.).

- type: BlobDetectionZoneConfiguration

# Format:

"blobDetectionZone":

{

"vertices": [[floatX, floatY], … , [floatX, floatY]],

"floorHeightThreshold": <float>

},

- vertices: list of 2D points on the floor, corresponding to the contour of the detection zone

- position [X, Y] is defined in meters, with respect to the center of the device on the floor

- X-axis is parallel to the device, oriented to the left

- Y-axis is perpendicular to the device, oriented forward

- floorHeightThreshold: height (in meters) relative to the floor, under which detected elements will be considered as part of the floor and not detected as users

# Example:

"blobDetectionZone":

{

"vertices": [[0.75, 0], [0.75, 1.5], [-0.75, 1.5], [-0.75, 0]],

"floorHeightThreshold": 0.3

},
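To get a feel for which floor positions a given list of vertices covers, you can test points against the polygon yourself. The sketch below uses the standard ray-casting test. It only illustrates the geometry of "vertices" and is not the SDK's detection code, which is not documented here; `in_detection_zone` is a hypothetical name.

```python
def in_detection_zone(x: float, y: float, vertices: list[list[float]]) -> bool:
    """Ray-casting test: is the floor point (x, y) inside the polygon?"""
    inside = False
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        # Does this edge straddle the horizontal line passing through y?
        if (y1 > y) != (y2 > y):
            # X coordinate at which the edge crosses that line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside
```

With the example zone above, a point one meter in front of the device, `in_detection_zone(0.0, 1.0, vertices)`, is inside, while a point two meters in front is outside.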

# "backgroundImage"

- description: name of the file used for the background. Only available on vertical configuration. Optional.

- usage: file to be put in the "Conf" folder of the release. Recommended resolution: 1080 * 1920 (or at least 9:16 ratio).

- type: string

WARNING

The upper part of the background will be hidden.

# Example

"backgroundImage": "wallpaper.png",

# "overrideShowCursor"

- description: shows the computer mouse cursor when the screen is not a touchscreen

- type: boolean

- possible values:

- true: mouse cursor is visible (to be used on laptops and other non-touch screens)

- false: mouse cursor is not visible (to be used on touchscreens)

# Character Selection

You can choose the character with which the users will interact. There are three characters available:

Spoony

Fogg

Moon

Character name usage: in all contents, you can use the element "{CHARACTER_NAME}". It will be replaced at runtime with the name of the character displayed on the screen.

To choose the character that will be displayed at start, use the "character" field of the configuration in the release.conf file.

# Interaction flows

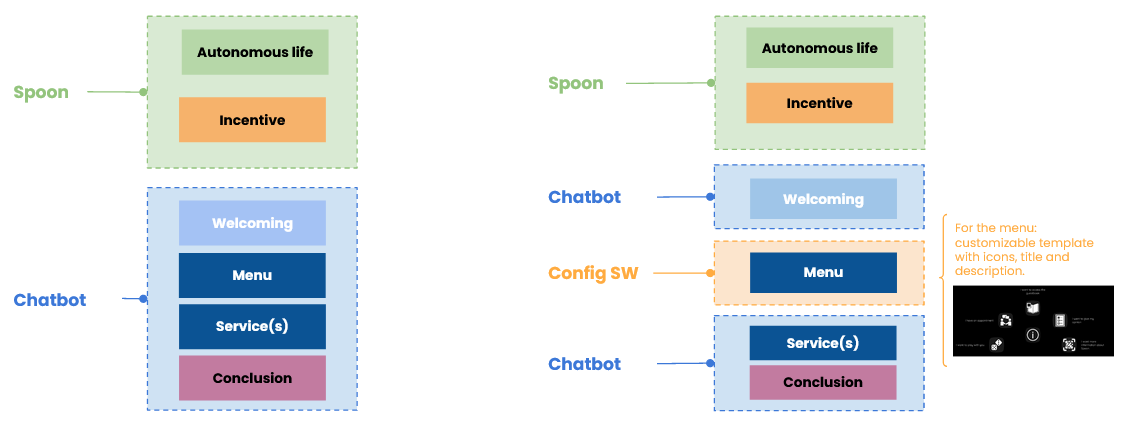

The two different interaction flows (linear and menu-based) are explained in the concepts section.

You have two ways to customize the interaction flow:

- Full custom flow: You have total freedom to implement the flow you want as your Dialogflow bot is started at the beginning of the interaction and runs till the conclusion. You can build both types of flows: linear and menu-based, depending on the content of your chatbot.

- Flow based on Spoon Service Launcher: This solution reuses SPooN's menu-based flow. You can customize the different steps of the flow and the different services linked in the menu by changing the default sentences or by overwriting them using chatbots.

# Interaction flow customization

To customize the flow, fill the "interactionFlow" field of the configuration in the release.conf file.

"interactionFlow":

{

"type": <InteractionFlowTypeEnum>,

"incitations": <IncitationsScenarioConfiguration>,

"welcoming": <WelcomingScenarioConfiguration>,

"fullScreenImages": <FullScreenImageConfiguration>,

"main": <MainScenarioConfiguration>,

"launcher": <LauncherScenarioConfiguration>,

"services": [

<ServiceConfiguration>,

...

<ServiceConfiguration>

],

"conclusion": <ConclusionScenarioConfiguration>

}

- type: choose interaction flow type

- type = Enum

- Custom: full custom flow

- WithLauncher: flow using Spoon's service Launcher

- incitations: definition of Incitations scenario

- welcoming: definition of Welcoming scenario

- fullScreenImages: definition of fullscreen images appearing during welcoming and/or incitations

- main: definition of main scenario (if type = Custom)

- type = MainScenarioConfiguration

- launcher: definition of Launcher scenario (if type = WithLauncher)

- services: list of the loaded services

- type = List<ServiceConfiguration>

- conclusion: definition of Conclusion scenario (only if type = WithLauncher)
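Since some fields only apply to one flow type, it can help to check an "interactionFlow" object against the list above. The following Python sketch is one way to do it. It assumes that a Custom flow needs its "main" scenario and that fields belonging to the other type are simply unused; neither behavior is confirmed by this page, and `check_interaction_flow` is a hypothetical helper.

```python
def check_interaction_flow(flow: dict) -> list[str]:
    """Return notes about an "interactionFlow" object (empty if none)."""
    notes = []
    flow_type = flow.get("type")
    if flow_type == "Custom":
        # ASSUMPTION: a Custom flow needs its "main" scenario to be defined.
        if "main" not in flow:
            notes.append('"main" is missing for type "Custom"')
        unused = ("launcher", "conclusion")
    elif flow_type == "WithLauncher":
        unused = ("main",)
    else:
        return [f"unknown type: {flow_type!r}"]
    # Fields documented for the other flow type only.
    for key in unused:
        if key in flow:
            notes.append(f'"{key}" is not used with type "{flow_type}"')
    return notes
```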

# Interaction flow configuration examples

# Custom Interaction Flow:

"interactionFlow":

{

"type": "Custom",

"main":

{

"chatbotConfiguration":

{

"engineName": "DialogFlow",

"connectionConfiguration":

{

"serviceAccountFileName": "mybot.json",

"platformName": ""

}

}

}

}

# Interaction Flow WithLauncher, using default scenarios:

"interactionFlow":

{

"type": "WithLauncher",

"welcoming":

{

"welcomingSentences": [

{ "en_US": "Welcome my friend!" },

{ "en_US": "Hello there" }

]

},

"launcher":

{

"introduction": [

{ "en_US": "What do you want to do?" },

{ "en_US": "What can I do for you?" }

],

"explanationValidation": [

{ "en_US": "Do you want me to show you my services?"}

],

"explanationIntroduction": [

{ "en_US": "Here are my services" }

],

"explanationFinalTransition": [

{ "en_US": "and finally" }

],

"doSomethingElseValidation": [

{ "en_US": "Do you want to do something else?"}

]

}

}

# Interaction Flow with launcher, using chatbots:

"interactionFlow":

{

"type": "WithLauncher",

"welcoming":

{

"override": true,

"chatbotConfiguration":

{

"engineName": "DialogFlow",

"connectionConfiguration":

{

"serviceAccountFileName": "mywelcomingbot.json",

"platformName": ""

}

}

},

"launcher":

{

"introduction": [

{ "en_US": "What do you want to do?" },

{ "en_US": "What can I do for you?" }

],

"explanationValidation": [

{ "en_US": "Do you want me to show you my services?"}

],

"explanationIntroduction": [

{ "en_US": "Here are my services" }

],

"explanationFinalTransition": [

{ "en_US": "and finally" }

],

"doSomethingElseValidation": [

{ "en_US": "Do you want to do something else?"}

]

},

"conclusion":

{

"override": true,

"chatbotConfiguration":

{

"engineName": "Inbenta",

"connectionConfiguration":

{

"inbentaApiKey": "xxxxxx",

"inbentaPrivateKeyFileName": "myinbentakey.key"

}

}

}

}

# IncitationsScenarioConfiguration

This is for configuring the incitations scenario of an interaction flow. See the interaction flow concept section for more information.

"incitations":

{

"incitationRampups": [

<IncitationRampupConfiguration>,

...

<IncitationRampupConfiguration>

],

"timeInSocialZoneBeforeIncitations" : <DistributedTime>,

"timeBetweenRampups" : <DistributedTime>,

"timeBetweenIncitationsInRampup" : <DistributedTime>

}

- incitationRampups: rampup of actions done by the character to call over the user.

- type = List<IncitationRampupConfiguration>

- timeInSocialZoneBeforeIncitations: time the user has to spend in the social zone before launching the incitations.

- type = DistributedTime

- default value = 2 seconds

- timeBetweenRampups: time to wait between two IncitationRampup.

- type = DistributedTime

- default value = between 10 seconds and 15 seconds

- timeBetweenIncitationsInRampup: time to wait between two Incitation.

- type = DistributedTime

- default value = between 3 seconds and 6 seconds

# Incitation Rampup Configuration

{

"incitations" : [

<IncitationConfiguration>,

...

<IncitationConfiguration>

],

"failReaction" : <SpoonCustomPayload>,

"successReaction" : <SpoonCustomPayload>,

"timeBeforeNextRampup" : <DistributedTime>,

"timeBetweenIncitationsInRampup" : <DistributedTime>

}

- incitations: list of actions (sequential) done by the character to call over the user.

- type = List<IncitationConfiguration>

- failReaction: action done when the user did not enter the personal zone and there is no incitation left to do.

- type = Spoon custom payload as described in the SPooN Specific Messages of the chatbot section.

- successReaction: default action done when the user enters the personal zone.

- type = Spoon custom payload as described in the SPooN Specific Messages of the chatbot section.

- timeBeforeNextRampup: override the time to wait until the next IncitationRampup.

- type = DistributedTime

- timeBetweenIncitationsInRampup: override the time to wait between two Incitation.

- type = DistributedTime

# Incitation Configuration

{

"sollicitation" : <SpoonCustomPayload>,

"successReaction" : <SpoonCustomPayload>,

"timeBeforeNextIncitation": <DistributedTime>

}

- sollicitation: action done by the character to call over the user.

- type = Spoon custom payload as described in the SPooN Specific Messages of the chatbot section.

- successReaction: action done when the user enters the personal zone. Overrides the one in IncitationRampupConfiguration.

- type = Spoon custom payload as described in the SPooN Specific Messages of the chatbot section.

- timeBeforeNextIncitation: override the time to wait until next Incitation.

- type = DistributedTime

# DistributedTime

If the field "value" is set, the distributed time value will be fixed.

Otherwise, if the fields "min" and "max" are set, the distributed time value will be a random number between them.

{

"min" : <float>,

"max" : <float>,

"value": <float>

}

- min: minimum time (in seconds).

- type = float

- default value = 0f

- max: maximum time (in seconds).

- type = float

- default value = 0f

- value: fixed time (in seconds).

- type = float

- default value = 0f
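The selection rule described above can be sketched in a few lines of Python. Two points are assumptions of this sketch, not documented behavior: a field counts as "set" when its key is present in the object, and the random draw is uniform. `resolve_distributed_time` is a hypothetical name.

```python
import random

def resolve_distributed_time(cfg: dict) -> float:
    """Return a duration in seconds for one DistributedTime object."""
    # ASSUMPTION: "set" means the key is present in the JSON object.
    if "value" in cfg:
        return float(cfg["value"])  # fixed time takes precedence
    if "min" in cfg and "max" in cfg:
        # ASSUMPTION: uniform draw; the actual distribution is not documented.
        return random.uniform(cfg["min"], cfg["max"])
    return 0.0  # documented default when nothing is set
```

For example, `{"value": 2}` always yields 2 seconds, while `{"min": 10, "max": 15}` yields a different duration between 10 and 15 seconds on each call.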

# Example

"incitations":{

"incitationRampups":[

{

"incitations":[

{

"sollicitation":

{

"id": "Say",

"text": "Hello, come closer!"

},

"reaction":

{

"id": "Say",

"text": "Cool you came!"

}

}

],

"failReaction":

{

"id": "Say",

"text": "Maybe next time."

}

}

]

}

TIP

Don't forget you can use a List of Skills with the random operator to add more variety to your solicitations and reactions.

# WelcomingScenarioConfiguration

This type corresponds to the configuration of the welcoming scenario of an interaction flow.

"welcoming":

{

"override": <bool>,

"chatbotConfiguration": <ChatbotConfiguration>,

"welcomingSentences": [

<LocalizableString>,

...

<LocalizableString>

],

"timeout": <float>

}

- override: to specify if using default welcoming or your own

- type: bool

- possible values:

- true: use your own welcoming scenario by connecting a chatbot

- false: use the default welcoming (but you can still customize the WelcomingSentences)

- chatbotConfiguration: connection to your chatbot (if override = true)

- type = ChatbotConfiguration

- welcomingSentences: sentences said by Spoony in the welcoming if the default version is used (if override = false)

- type = List<LocalizableString>

- timeout: (optional, 10 s by default) time in seconds after which the scenario quits if no user is detected by the 3D camera.

WARNING

When running SPooN software without a 3D camera, or if no detection zone has been set, the timeout is always 20 s, and user absence is inferred from the lack of interaction (no speech, no touch).

# FullScreenImagesConfiguration

During Autonomy, Spoony displays the images defined in "dynamicImages" in a carousel-like fashion. The image in "mainImage" is used if no "dynamicImages" are set.

During Incitations, Spoony displays the image set in "mainImage" until the person leaves or enters the personal zone.

"fullScreenImages":

{

"duringAutonomy": <bool>,

"duringIncitations": <bool>,

"mainImage": <FullScreenImageConfiguration>,

"dynamicImages": [

<FullScreenImageConfiguration>,

...

<FullScreenImageConfiguration>

]

}

- duringAutonomy: to specify if we use fullscreen images during Autonomy.

- type: bool

- duringIncitations: to specify if we use fullscreen images during Incitations.

- type = bool

- mainImage: the image that will be displayed during Welcoming and Autonomy if no dynamicImages is set.

- type = FullScreenImageConfiguration

- dynamicImages: the images that will be displayed during Autonomy (carousel).

- type = List<FullScreenImageConfiguration>

# FullScreenImageConfiguration

"fullScreenImage":

{

"imagePath": <string>,

"displayDuration": <float>,

}

- imagePath: path of the image you want to display.

- type: string

- displayDuration: display duration of the image in seconds (only during Autonomy).

- type = float

- default value = 100 seconds

# MainScenarioConfiguration

This type corresponds to the configuration of the main scenario of the interaction flow (only used for an interaction flow with type = "Custom").

"main":

{

"chatbotConfiguration": <ChatbotConfiguration>,

"timeout": <float>,

"validatedSpeechFallback": <bool>

}

- chatbotConfiguration: connection to your chatbot

- type = ChatbotConfiguration

- timeout: (optional, 10 s by default) time in seconds after which the scenario quits if no user is detected.

- validatedSpeechFallback: (optional, false by default), if set to true, will send the validated speech fallbacks to the chatbot engine as explained in the concept section. You should only have one chatbot with the Validated Speech Fallback mechanism enabled, in your whole configuration file.

WARNING

When running SPooN software without a 3D camera, or if no detection zone has been set, the timeout is always 20 s, and user absence is inferred from the lack of interaction (no speech, no touch).

# LauncherScenarioConfiguration

This type corresponds to the configuration of the Launcher scenario of the interaction flow (only used for an interaction flow with type = "WithLauncher").

"welcoming":

{

"introduction": [

<LocalizableString>,

...

<LocalizableString>

],

"explanationValidation": [

<LocalizableString>,

...

<LocalizableString>

],

"explanationIntroduction": [

<LocalizableString>,

...

<LocalizableString>

],

"explanationFinalTransition": [

<LocalizableString>,

...

<LocalizableString>

],

"doSomethingElseValidation": [

<LocalizableString>,

...

<LocalizableString>

]

}

- introduction: sentence said by Spoony when starting the Launcher (before displaying available services)

- type = List<LocalizableString>

- default:

[{

"en_US": "How can I help you?",

"fr_FR": "Comment puis-je t'aider ?"

}]

- explanationValidation: question asked by Spoony proactively to validate the need of service explanation

- type = List<LocalizableString>

- default:

[{

"en_US": "Do you want me to tell you what we can do ?",

"fr_FR": "Veux tu que je te décrive ce que nous pouvons faire ensemble ?"

}]

- explanationIntroduction: sentence said by Spoony before describing one by one the services (using Explanation defined in Service config)

- type = List<LocalizableString>

- default:

[{

"en_US": "We can do a lot together",

"fr_FR": "On peut faire plein de choses tous les deux"

}]

- explanationFinalTransition: sentence said by Spoony before describing the last service

- type = List<LocalizableString>

- default:

[{

"en_US": "And finally",

"fr_FR": "Et pour finir"

}]

- doSomethingElseValidation: sentence said by Spoony after the end of a service to check if the user wants to do something else

- type = List<LocalizableString>

- default:

[{

"en_US": "Do you want to do something else?",

"fr_FR": "Veux-tu faire autre chose ?"

}]

# ServiceConfiguration

This type corresponds to the configuration of a service, that will be loaded in the Launcher or outside (based on the value of field canBeProposed).

{

"name": <string>,

"canBeProposed": <bool>,

"iconName": <string>,

"timeout": <float>,

"trigger": <LocalizableString>,

"explanation": <LocalizableString>,

"chatbotConfiguration": <ChatbotConfiguration>,

"validatedSpeechFallback": <bool>

}

- name: internal reference for the service (for example MyBestService)

- type: string

- canBeProposed: whether or not you want your service to be visible and proposed in the Launcher (if one is configured in the interaction flow)

- type: bool

- possible values:

- true: the service is visible and proposed in the Launcher

- false: the service is neither visible nor proposed in the Launcher but can still be triggered (from intent matching)

- iconName: the name of the icon that will be displayed in the Launcher for your main entry

- type: string

- possible values:

- for the moment the available iconName values are: ["calendar", "candidate", "car", "car2", "form", "foodfinder", "jokes", "learning", "map", "matcha", "motivation", "news", "orientation", "registration", "sms", "startups", "takethepose"]

- other icons can be added on demand

- timeout: time in seconds after which the robot will exit the scenario if the user has left the personal zone. Usually 10 to 15s.

- type: float

- trigger: sentence that will be displayed in the Launcher (at the same time as the icon) as an example of trigger for the service (if a Launcher is present in the interaction flow)

- type: LocalizableString

- explanation: sentence that the character will use to describe the service to the user during the Explanation (if a Launcher is present in the interaction flow)

- type: LocalizableString

- chatbotConfiguration: connection to your chatbot

- type = ChatbotConfiguration

- validatedSpeechFallback: (optional, false by default), if set to true, will send the validated speech fallbacks to the chatbot engine as explained in the concept section. You should only have one chatbot with the Validated Speech Fallback mechanism enabled, in your whole configuration file.

# ConclusionScenarioConfiguration

This type corresponds to the configuration of the Conclusion scenario of the interaction flow (only used for an interaction flow with type = "WithLauncher").

"conclusion":

{

"override": <bool>,

"chatbotConfiguration": <ChatbotConfiguration>,

"timeout": <float>

}

- override: to specify if using default conclusion or your own

- type: bool

- possible values:

- true: use your own conclusion scenario by connecting a chatbot

- false: use the default conclusion scenario

- chatbotConfiguration: connection to your chatbot (if override = true)

- type = ChatbotConfiguration

- timeout: (optional, 10 s by default) time in seconds after which the scenario quits if no user is detected.

WARNING

When running SPooN software without a 3D camera, or if no detection zone has been set, the timeout is always 20 s, and user absence is inferred from the lack of interaction (no speech, no touch).

# ChatbotConfiguration

This type corresponds to the configuration to connect a chatbot. See documentation about Chatbot Connection

"chatbotConfiguration":

{

"engineName": <string>,

"startTrigger": <string> (optional),

"connectionConfiguration":

{

<specific fields depending on the engine>

}

}

- engineName: name of the chatbot engine to connect

- possible values:

- "DialogFlow"

- "Inbenta"

- startTrigger: (optional) if you don't want to use Spoon_GenericStart as the event trigger of the starting bot, fill this field in with your custom name. You can then use this trigger in Dialogflow as an Event to trigger the start of the chatbot.

- connectionConfiguration: specific connection fields. See documentation of the chatbot engine you want to connect.

# LocalizableString

This type corresponds to a content that will be said or understood by the character. In order to be compatible with Language switch, this type stores the translations in the different languages, in order to be able to retrieve the right translation based on the current language of the character.

{

"en_US": <string>,

"fr_FR": <string>

}

- en_US: English version of the content

- fr_FR: French version of the content

# Specific configuration files

# Specific Contents

# Usage

It is possible to quickly define answers to a list of specific questions, that will be loaded from local configuration files on the device.

WARNING

These questions and answers are defined outside of the main interaction flow, so they can confuse users. We recommend using chatbots instead to integrate specific questions and answers.

# Link with main configuration

Specific content files are loaded only if they are linked in the release.conf file, using the "specificContents" field.

# Format & content

- CSV format, with ";" as separator character

- One line per entry

- Each line has the following elements: Language;WordSpotting;Input;Output

- Language: Language code of the content

- WordSpotting: 0 or 1

- 0: word spotting is disabled; the character answers with the Output only if the user says exactly the Input

- 1: word spotting is enabled; the character answers with the Output only if the sentence said by the user contains the Input

- Input: element to be said by the user for the character to react

- Output: content of the reaction of the character in response to the input

- for long contents in Output, use "//" to split this Output into multiple sentences that are said sequentially by the character

# Example :

Language;WordSpotting;Input;Output

fr_FR;0;Parle moi de Jérome;C'est un mec génial // Je l'adore!

en_US;0;Tell me more about Jérome;He is such a cool guy // I love him

fr_FR;1;choucroute garnie;Ah tu parle de choucroute // J'adore ça moi!
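To make the format concrete, here is a minimal Python sketch that reads such a file and looks up the answer for an utterance. It assumes the file starts with the header row shown in the example and that matching ignores case and surrounding whitespace; how the character actually normalizes speech is not documented here. Both function names are hypothetical.

```python
import csv
import io

def load_specific_contents(csv_text: str) -> list[dict]:
    """Parse a Specific Contents file (";" separated, header row first)."""
    reader = csv.DictReader(
        io.StringIO(csv_text), delimiter=";", quoting=csv.QUOTE_NONE
    )
    entries = []
    for row in reader:
        entries.append({
            "language": row["Language"],
            "word_spotting": row["WordSpotting"] == "1",
            "input": row["Input"],
            # "//" splits the Output into sentences said one after another.
            "sentences": [part.strip() for part in row["Output"].split("//")],
        })
    return entries

def find_answer(entries: list[dict], language: str, utterance: str):
    """Return the sentences of the first matching entry, or None."""
    said = utterance.strip().lower()  # ASSUMPTION: case-insensitive matching
    for entry in entries:
        if entry["language"] != language:
            continue
        expected = entry["input"].lower()
        if entry["word_spotting"]:
            matched = expected in said   # the sentence contains the Input
        else:
            matched = expected == said   # the sentence is exactly the Input
        if matched:
            return entry["sentences"]
    return None
```

With the example file above, an utterance that merely contains "choucroute garnie" matches the third entry because its WordSpotting value is 1, whereas the first entry only matches the exact sentence.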

# Specific Dictation Constraints

# Usage

You can help the speech recognition (dictation) detect unusual words or words with a specific spelling (for example brand or product names) using these configuration files.

# Link with main configuration

Specific Dictation Constraints files are loaded only if they are linked in the release.conf file, using the "specificDictationConstraints" field (see above).

# Format & content:

- CSV format, with ";" as separator character

- One line per entry

# Example:

Dictation Constraints

Spoony

SpecificBrandName

# Specific Grammar Pronunciations

# Usage

You can help the speech recognition (grammar) to detect specific contents by providing their pronunciation using these configuration files.

# Link with main configuration

Specific Grammar Pronunciation files are loaded only if they are linked in the release.conf file, using the "specificGrammarPronunciations" field.

# Format & content:

- CSV format, with ";" as separator character

- One line per entry

- Each line has the following elements: Language;Input;Correction

- Language: Language code of the content

- Input: the text to be recognized

- Correction: a more literal/phonetic spelling of the input in the chosen language to help the grammar based speech recognition

# Example:

Language;Input;Correction

fr_FR;Spoon;Spoun

fr_FR;Coony;Couny

fr_FR;Birdly®;beurdly

fr_FR;km/h;kilomètre heure

en_US;km/h;kilometer per hour

fr_FR;€;euros

en_US;€;euros

# Specific Pronunciations

# Usage

You can improve the pronunciation of specific words by the character by providing their phonetic spelling in these configuration files.

# Link with main configuration

Specific Pronunciation files are loaded only if they are linked in the release.conf file, using the "specificPronunciations" field.

# Format & content:

- CSV format, with ";" as separator character

- One line per entry

- Each line has the following elements: Language;VoiceName;Input;Pronunciation

- Language: Language code of the content

- VoiceName: name of the voice that will use this specific pronunciation (use "All" to apply the pronunciation for all the voices of the chosen language)

- Input: the text for which the pronunciation is improved

- Pronunciation: a more literal/phonetic spelling of the input in the chosen language for the pronunciation

# Example:

Language;VoiceName;Input;Pronunciation

fr_FR;All;&;et

fr_FR;All;Spoon;Spoun

fr_FR;All;streaming;striming

en_US;All;Tour Eiffel;tour hey fell
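The effect of these rules can be pictured as a text substitution applied before speech synthesis. The Python sketch below illustrates that idea only. It assumes plain substring replacement, which may differ from how the SDK matches words, and `apply_pronunciations` and the voice name used in the usage line are hypothetical.

```python
def apply_pronunciations(text: str, language: str, voice: str, rules) -> str:
    """Apply (Language, VoiceName, Input, Pronunciation) rules to a text."""
    for rule_language, voice_name, source, spoken in rules:
        if rule_language != language:
            continue
        # "All" applies the rule to every voice of the language.
        if voice_name not in ("All", voice):
            continue
        # ASSUMPTION: plain substring replacement, no word-boundary handling.
        text = text.replace(source, spoken)
    return text
```

For instance, with the rule `("fr_FR", "All", "Spoon", "Spoun")`, calling `apply_pronunciations("Bienvenue chez Spoon", "fr_FR", "AnyVoice", rules)` returns "Bienvenue chez Spoun".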