# Chatbots

SPooN's solution can be connected to chatbots built with different engines: Dialogflow, Inbenta and Tock.

# Dialogflow

SPooN's solution can be connected to chatbots (or agents) created in Dialogflow ES.

To create a new chatbot, you can follow the tutorial provided by Google.

# How to link a SPooN release with Dialogflow chatbots

# Google & DialogFlow authentication system using Service Accounts

For a service to be allowed to communicate with a Dialogflow chatbot, it must use validated credentials. Google handles this with “service accounts”: validated identities to which you can grant the permissions needed to communicate with the Dialogflow chatbot.

TIP

For SPooN's software to communicate with your Dialogflow chatbot, you need a service account.

# Generate a service account for your chatbot

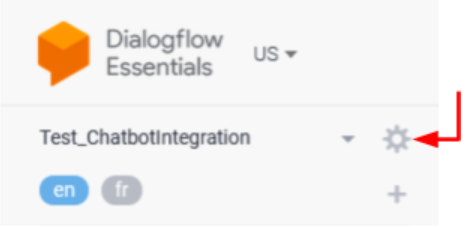

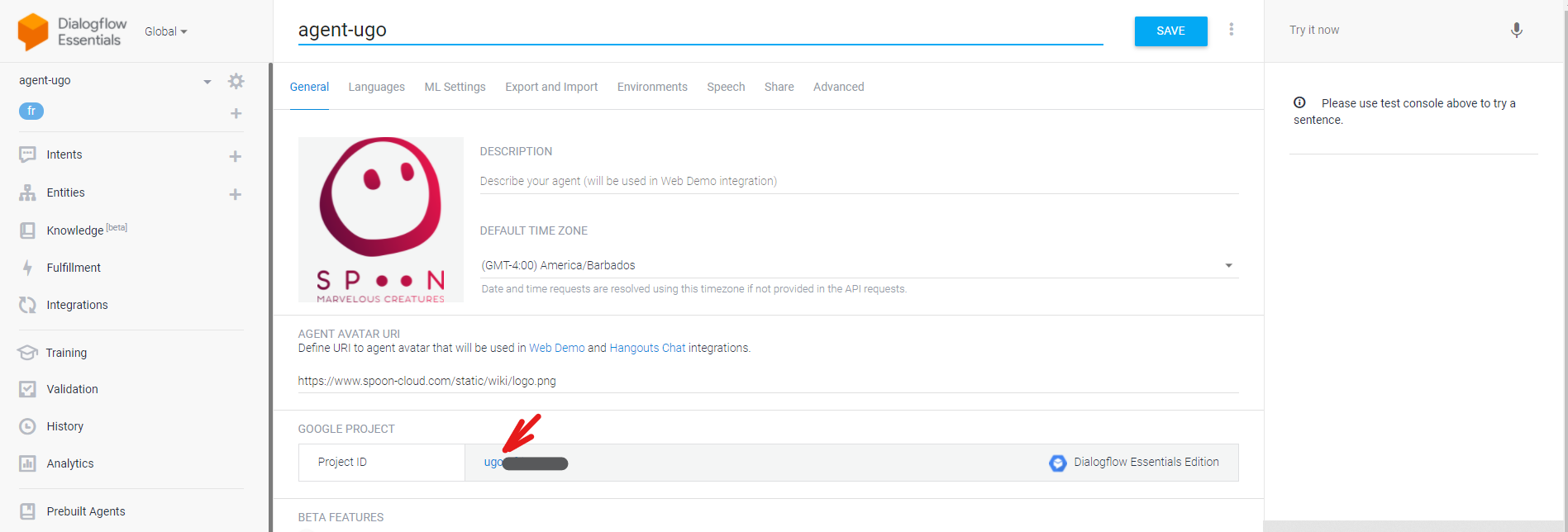

# Retrieve the project ID of your DialogFlow agent

- Go to the settings of your agent in Dialogflow

- Click on the Project ID field. This redirects you to the corresponding project on Google Cloud Platform

# Create a Service Account with proper role & permissions + Key

Once on Google Cloud Platform (see previous section):

- Go to Menu > IAM & Admin > Service Accounts

- Create the service account:

  - Click on + Create Service Account

  - Give your service account a name, for example SpoonClient

  - Click on Create

  - In Select Role, choose the Dialogflow API Client role

  - Click on Continue

  - Leave Grant users access to this service account empty

  - Click on Done

- Create a key for your new service account:

  - Your service account should appear in the displayed list

  - Click on the ... button in the Actions column, then choose Manage Key > Add key > Create new key

  - Choose JSON in the popup, then click on Create

  - Download the JSON file to your computer and place it in the `C:/Program Files/SPooN/developers-1.6.0/release/Conf` folder.

TIP

Thanks to this JSON key file, Spoon software will be able to authenticate itself as the Service account, with the right permissions to communicate with your DialogFlow agent.

WARNING

- Do not commit your JSON key file to a git repository! Anyone who obtains it can use your identity, and your billing could increase a lot. If you want to version your configuration with git, add the JSON file to your .gitignore.

- Only grant the Dialogflow API Client role in IAM. If the identity is ever leaked, an attacker is limited to what this role allows.

# Link DialogFlow chatbots in the interaction flow

You can connect your chatbots at different steps of the interaction flow. See the documentation about the Interaction Flow concepts and the technical details on how to customize your interaction flow using chatbots.

The connection of your chatbot relies on the definition of a ChatbotConfiguration. For DialogFlow chatbots:

```json
"chatbotConfiguration": {
  "engineName": "DialogFlow",
  "connectionConfiguration": {
    "serviceAccountFileName": <string>,
    "platformName": <string>,
    "environmentName": <string>
  }
}
```

- engineName: set it to "DialogFlow", because you are using the Dialogflow chatbot engine

- connectionConfiguration: contains the information needed to connect SPooN's software to your Dialogflow chatbot

  - serviceAccountFileName: full name (including the extension) of your service account JSON file, for example "projectID-XXX.json". Make sure this file has actually been copied next to the release.conf in the SPooN release configuration folder: `C:/Program Files/SPooN/developers-1.6.0/release/Conf`

  - platformName: (for expert usage) lets you choose which Dialogflow platform messages from your agent are used in the connection with SPooN's software

    - Dialogflow offers multiple integration platforms (Default, Slack, Telegram, …) with different message types, and SPooN can be connected to all of them

    - For non-expert usage, we recommend the Default platform. To select it, set an empty platformName ("platformName": "") or leave out the platformName field

  - environmentName: lets you select a specific environment for your chatbot if you are using versions and environments (see the Dialogflow documentation)

    - Specify the name of the environment in this field, for example "environmentName": "Production"

    - If you haven't published a version, you can use "environmentName": "Draft"
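To illustrate the fields above, here is a small JavaScript sketch that builds a `chatbotConfiguration` object for Dialogflow. `buildDialogflowChatbotConfiguration` is a hypothetical helper. Its defaults, an empty `platformName` for the Default platform and `"Draft"` as `environmentName`, are assumptions of this sketch that follow the recommendations in the list above. The printed object shows the nesting expected in release.conf.

```javascript
// Sketch: assemble the Dialogflow chatbotConfiguration block.
// buildDialogflowChatbotConfiguration is a hypothetical helper; its defaults are
// assumptions that follow the recommendations in the documentation.
function buildDialogflowChatbotConfiguration({
  serviceAccountFileName,
  platformName = "", // "" selects the Default platform
  environmentName = "Draft", // "Draft" if no version has been published
}) {
  if (typeof serviceAccountFileName !== "string" || !serviceAccountFileName.endsWith(".json")) {
    throw new Error("serviceAccountFileName must be the full file name, including .json");
  }
  return {
    chatbotConfiguration: {
      engineName: "DialogFlow", // value to use for the Dialogflow engine
      connectionConfiguration: { serviceAccountFileName, platformName, environmentName },
    },
  };
}

// Prints the nesting expected in release.conf
const config = buildDialogflowChatbotConfiguration({ serviceAccountFileName: "projectID-XXX.json" });
console.log(JSON.stringify(config, null, 2));
```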

# How to bind the main entry of a Service with your DialogFlow chatbot

As explained in the technical documentation about ServiceConfiguration, a service can be connected to a chatbot. If the canBeProposed field of your service is set to true, the service gets a main entry point, which is accessible in the Launcher:

- by clicking on its icon

- by saying its trigger example

TIP

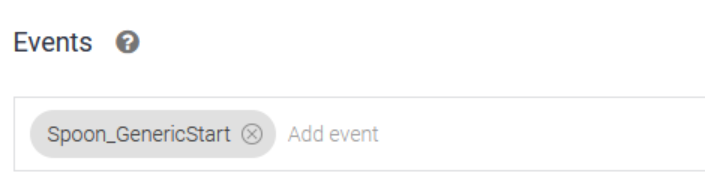

This will send the event "Spoon_GenericStart" to your chatbot. You can also customize it in the chatbotConfiguration to use your own event name.

For your chatbot to react to this event, add the event "Spoon_GenericStart" (or the event name you chose in your customization) to one of the intents of your agent.

# How to send custom messages to SPooN

Once you've matched your intent with Dialogflow, the responses that you add to that detected intent will be sent to SPooN as actions to be executed. You have different ways of controlling SPooNy from the SDK.

SPooN will read the text of the response that you wrote in Dialogflow.

But if you want to make better use of SPooN's capabilities (make SPooNy smile, show images, multi-choice question,...), you should use SPooN's custom payloads. Simply click the Add Responses button in Dialogflow's intent and choose Custom Payload. You can add multiple payloads as actions to the same intent (by adding multiple CustomPayload type responses).

If you have multiple responses in the same intent, then SPooNy will execute the corresponding actions one after the other.

The payloads are described in the SPooN specific messages section.
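Custom payloads wrap a SPooN skill in a top-level `"spoon"` object. If you prefer to prepare them in code, the sketch below serializes a skill into that envelope so you can paste it into a Custom Payload response. `toCustomPayload` is a hypothetical helper. The `Say` and `DisplayImage` fields come from the SPooN specific messages section.

```javascript
// Sketch: wrap a SPooN skill in the {"spoon": {...}} envelope of a custom payload.
// toCustomPayload is a hypothetical helper, not part of SPooN's SDK.
function toCustomPayload(skill) {
  if (!skill || typeof skill.id !== "string") {
    throw new Error('every SPooN skill needs a string "id"');
  }
  // Pretty-printed JSON, ready to paste into a Custom Payload response
  return JSON.stringify({ spoon: skill }, null, 2);
}

// One Custom Payload response per action; SPooNy executes them one after the other.
const responses = [
  toCustomPayload({
    id: "Say",
    text: "Hello!",
    expression: { type: "Happy", intensity: 0.5, gazeMode: "Focused" },
    bang: false,
  }),
  toCustomPayload({ id: "DisplayImage", imagePath: "https://docs.spoon.ai/static/docs/img/HOMME-4.png" }),
];
responses.forEach((payload) => console.log(payload));
```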

# DialogFlow specific tips & tricks

# Open Question DialogFlow

If you're capturing free-speech user input (such as a name), use the OpenQuestion payload in your intent, as described here.

Let's use the following example:

```json
{
  "spoon": {
    "id": "OpenQuestion",
    "questionId": "Name",
    "question": "What is your name?"
  }
}
```

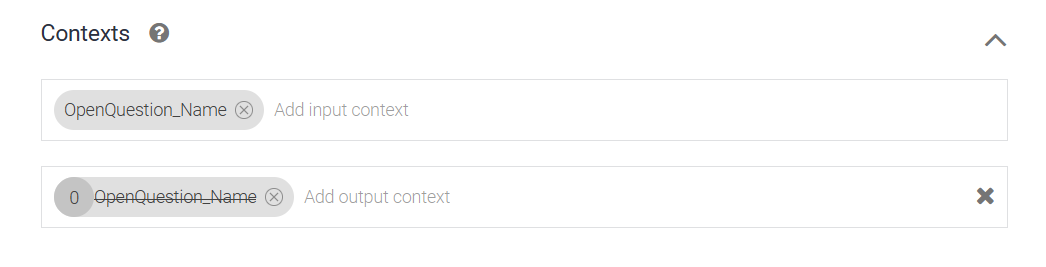

For this mechanism to work, do the following:

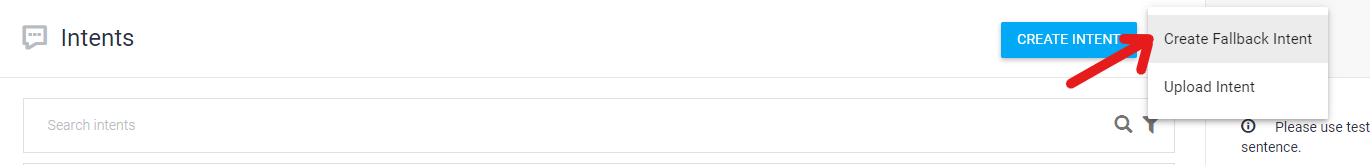

- Create a fallback intent (click on the "..." icon next to the

Create Intentbutton and chooseCreate Fallback Intent) - Name this intent as you wish (it has no impact on the mechanism)

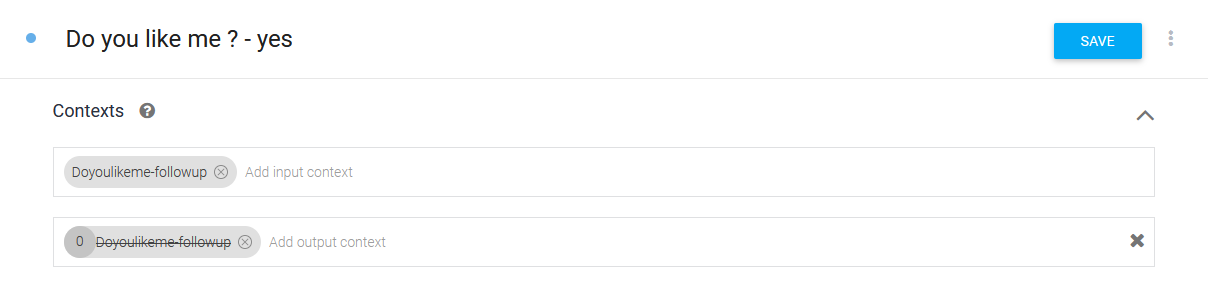

- Add an input context to match your open question. The name of the input context is normalized:

OpenQuestion_+ thequestionIdthat you chose in the payload (soOpenQuestion_Namein our example). - Make sure this context is removed when the intent is triggered by setting its lifespan to 0 in the output context field.

Your fallback intent is triggered when the user answers the open question. You can then retrieve their answer from the text of the query, using fulfillments with the Dialogflow Inline Editor or your own webhook service.

Check the example to see how this can be achieved.

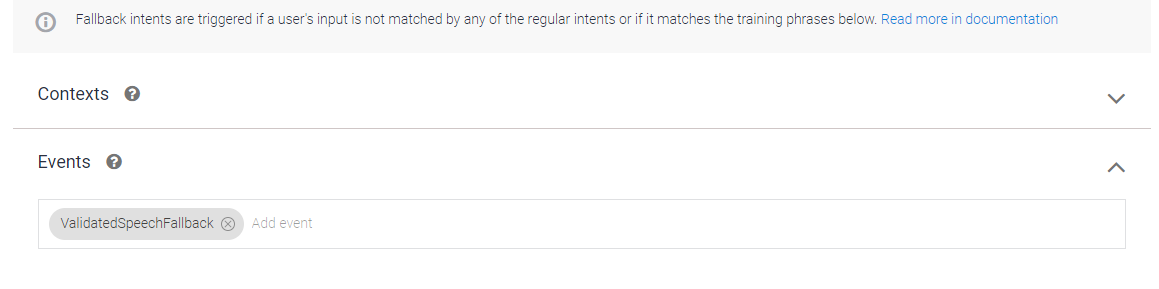

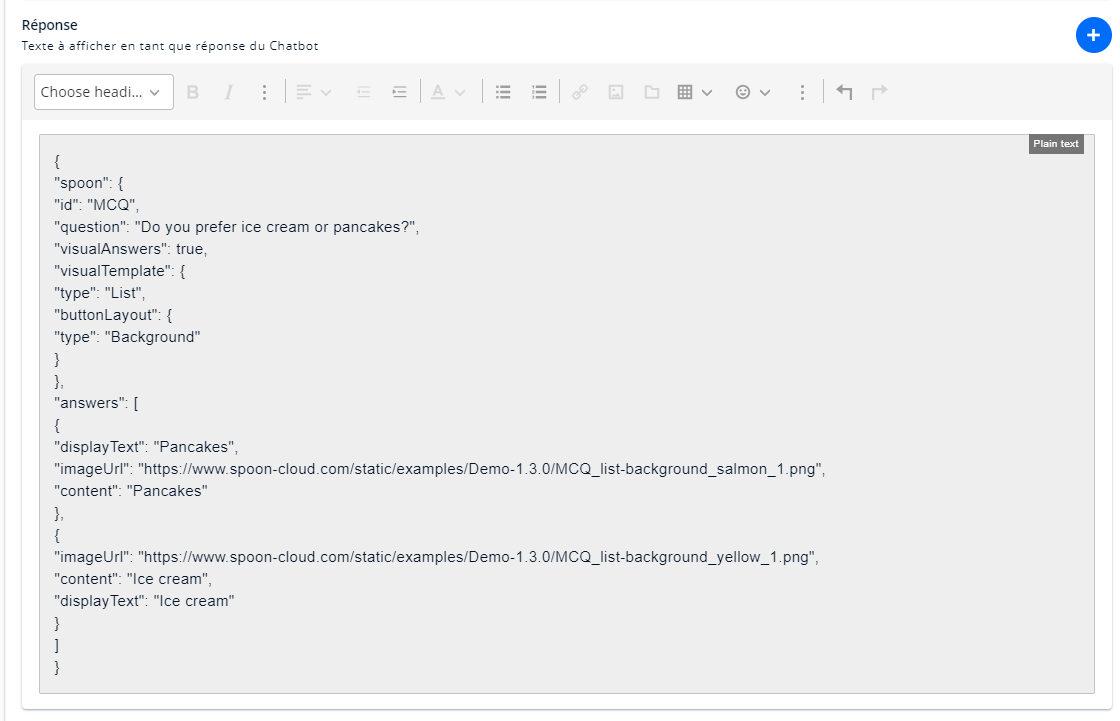
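The naming rule and the retrieval of the answer can be sketched in plain JavaScript. This sketch assumes the Dialogflow ES webhook request format, where the user's text is in `queryResult.queryText`. That is the text the fulfillment examples further down read as the user's answer. `openQuestionContextName` and `readOpenQuestionAnswer` are hypothetical helpers.

```javascript
// Sketch: open question naming rule and answer retrieval.
// Both helpers are hypothetical; the webhook body shape assumes Dialogflow ES.
function openQuestionContextName(payload) {
  // Input context of the fallback intent: "OpenQuestion_" + questionId
  return "OpenQuestion_" + payload.spoon.questionId;
}

function readOpenQuestionAnswer(webhookBody) {
  // The user's free-speech answer is the text of the query
  const queryResult = webhookBody && webhookBody.queryResult;
  return queryResult ? queryResult.queryText : undefined;
}

const namePayload = { spoon: { id: "OpenQuestion", questionId: "Name", question: "What is your name?" } };
console.log(openQuestionContextName(namePayload)); // OpenQuestion_Name
console.log(readOpenQuestionAnswer({ queryResult: { queryText: "Alice" } })); // Alice
```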

# Creating a Speech Fallback mechanism - Dialogflow

Check the concept section to understand what the validated speech fallback mechanism is.

First create a new Fallback Intent:

For the fallback to trigger every time the user selects an unrecognized input, add an event named ValidatedSpeechFallback to this fallback intent:

You can now create the behavior you want for this fallback mechanism by adding responses as you would for a normal event.

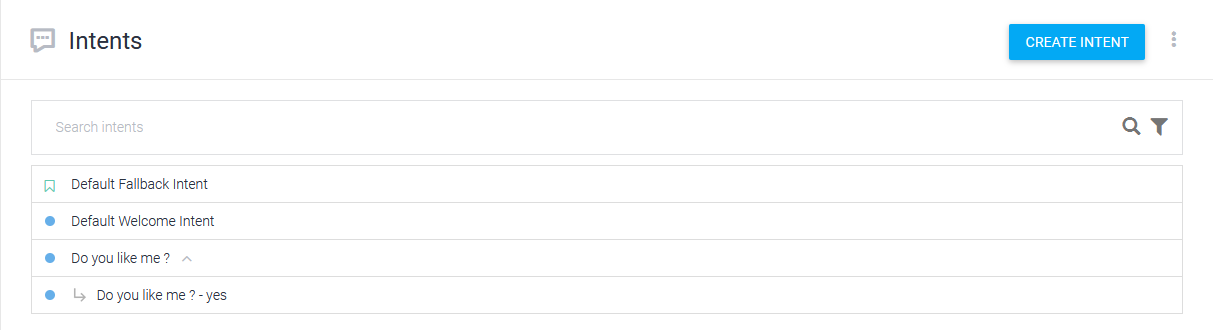

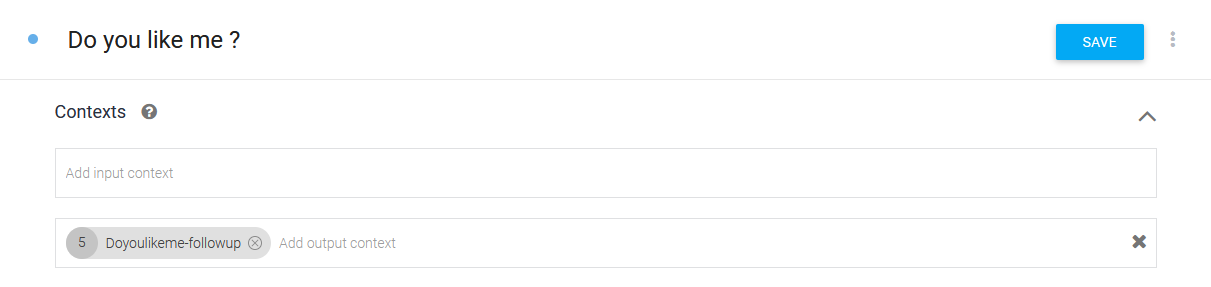

# Multiple Choice Questions DialogFlow

To create an MCQ (Multiple Choice Question) from Dialogflow, do the following:

- create one intent for the question. Then add a custom payload containing the MCQ as documented here

- create one follow-up intent for each answer to the question: when you hover over the question's intent in Dialogflow, you see the Add follow-up intent button on the intent itself. Once the follow-up intent has been created it appears nested under the question intent.

- in the question intent, update the automatically created context lifespan to 5 instead of 2.

We use a longer lifespan for the MCQ because the user may not answer straight away, for example if they're chatting with someone else. Their unmatched sentences are still sent to Dialogflow. They don't trigger the MCQ answer (rightfully so), but each one uses up part of the context's lifespan. With the default lifespan of 2, the context could expire before the user actually answers, and the MCQ answer would then no longer be triggered.

- in the follow-up intents (the answers), reset the context by setting its lifespan to 0 in the output context. This signals that we are out of the MCQ, so your answer intents don't trigger again until you're back in the MCQ.
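The reasoning behind a lifespan of 5 can be illustrated with a deliberately simplified model. The model assumes that every sentence the user says after the question uses up one turn of the context's lifespan. `mcqStillActive` is a hypothetical helper and is not Dialogflow code.

```javascript
// Simplified model (an assumption, not Dialogflow's implementation): every
// sentence the user says after the MCQ question uses up one turn of the
// context's lifespan. mcqStillActive is a hypothetical helper.
function mcqStillActive(lifespan, unmatchedSentences) {
  // The answer is matched only if at least one turn of lifespan is left.
  return unmatchedSentences < lifespan;
}

for (const lifespan of [2, 5]) {
  for (let unmatched = 0; unmatched <= 4; unmatched++) {
    const result = mcqStillActive(lifespan, unmatched) ? "matched" : "lost";
    console.log(`lifespan ${lifespan}, ${unmatched} unmatched sentence(s) first: answer ${result}`);
  }
}
```

Under this model, two off-topic sentences are enough to lose the MCQ with a lifespan of 2. With a lifespan of 5, the user can say up to four before answering.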

# Dialogflow examples

Let's look at two examples in Dialogflow:

- Dialogflow example 1 : a withLauncher interaction flow

- Dialogflow example 2 : a custom interaction flow

# Dialogflow example 1

Let's build the following example together on Dialogflow.

Download the zipped chatbot here.

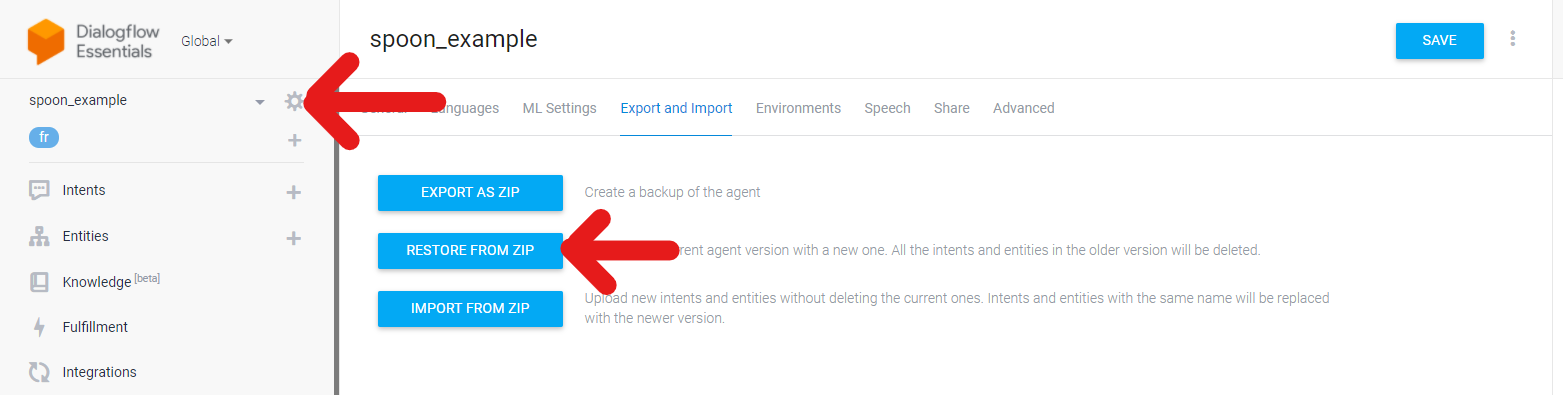

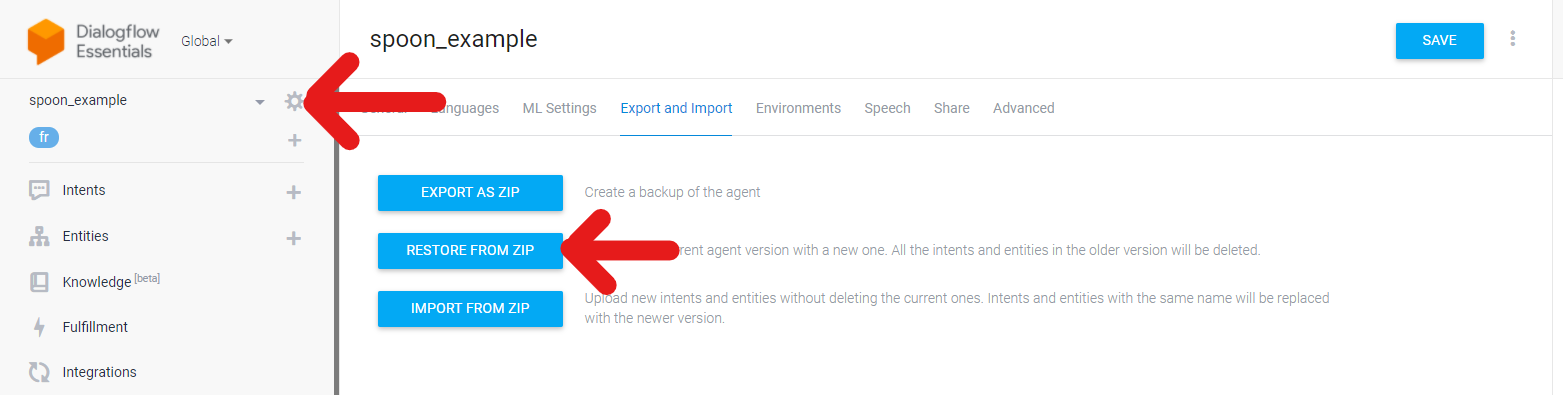

Create a new chatbot in Dialogflow - make sure you use the correct default language, French in this example - and import the downloaded zipped example. As shown on the screenshot below, click on the wheel at the top left, then Export and Import and choose the Restore from zip option.

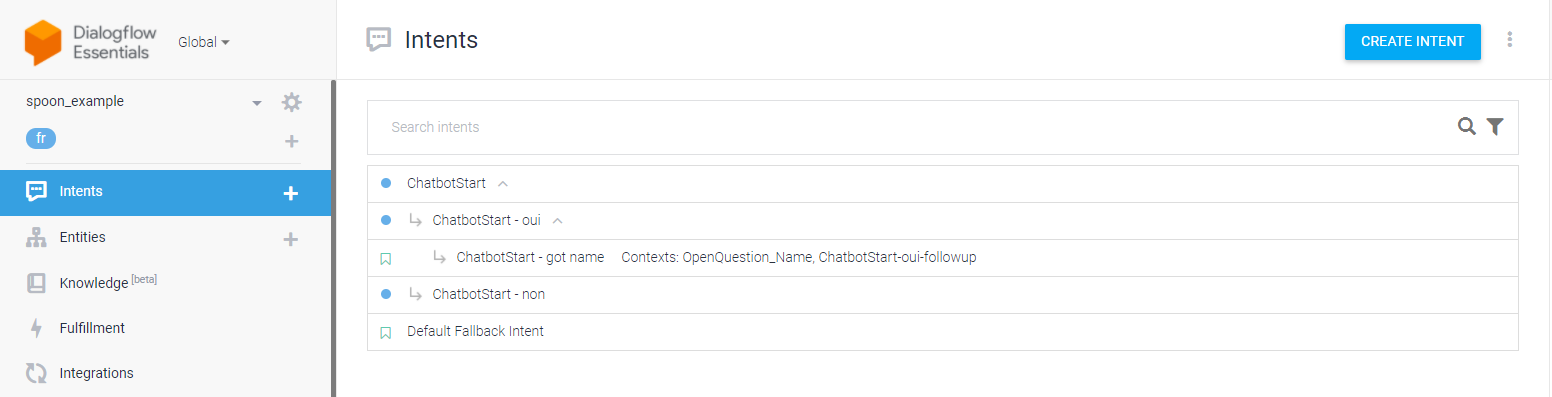

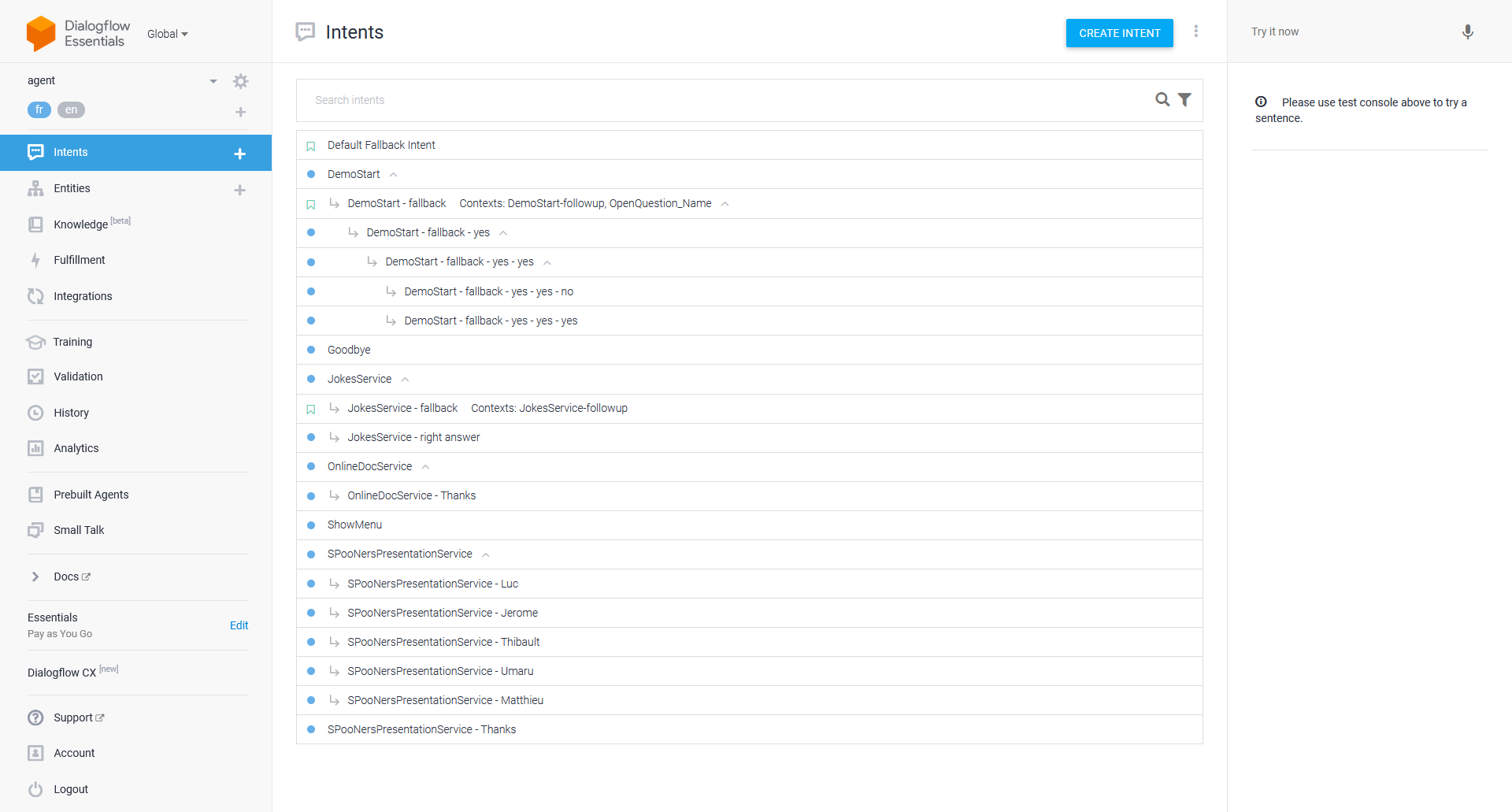

After the import, go back to the intent page. You now have access to the following intents. Feel free to look at each intent to see the different ways to interact with SPooNy: speaking, displaying pictures, asking questions, ...

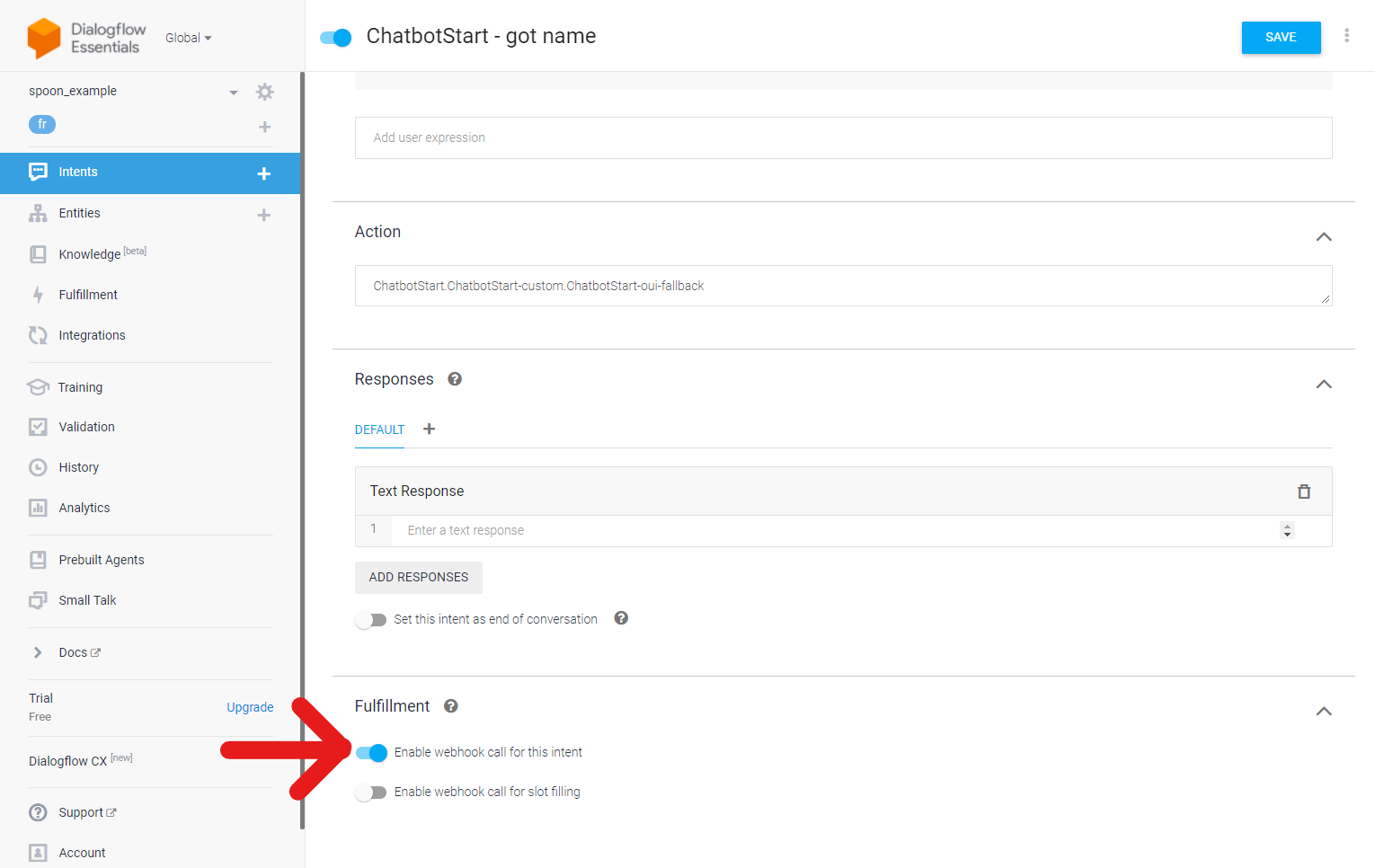

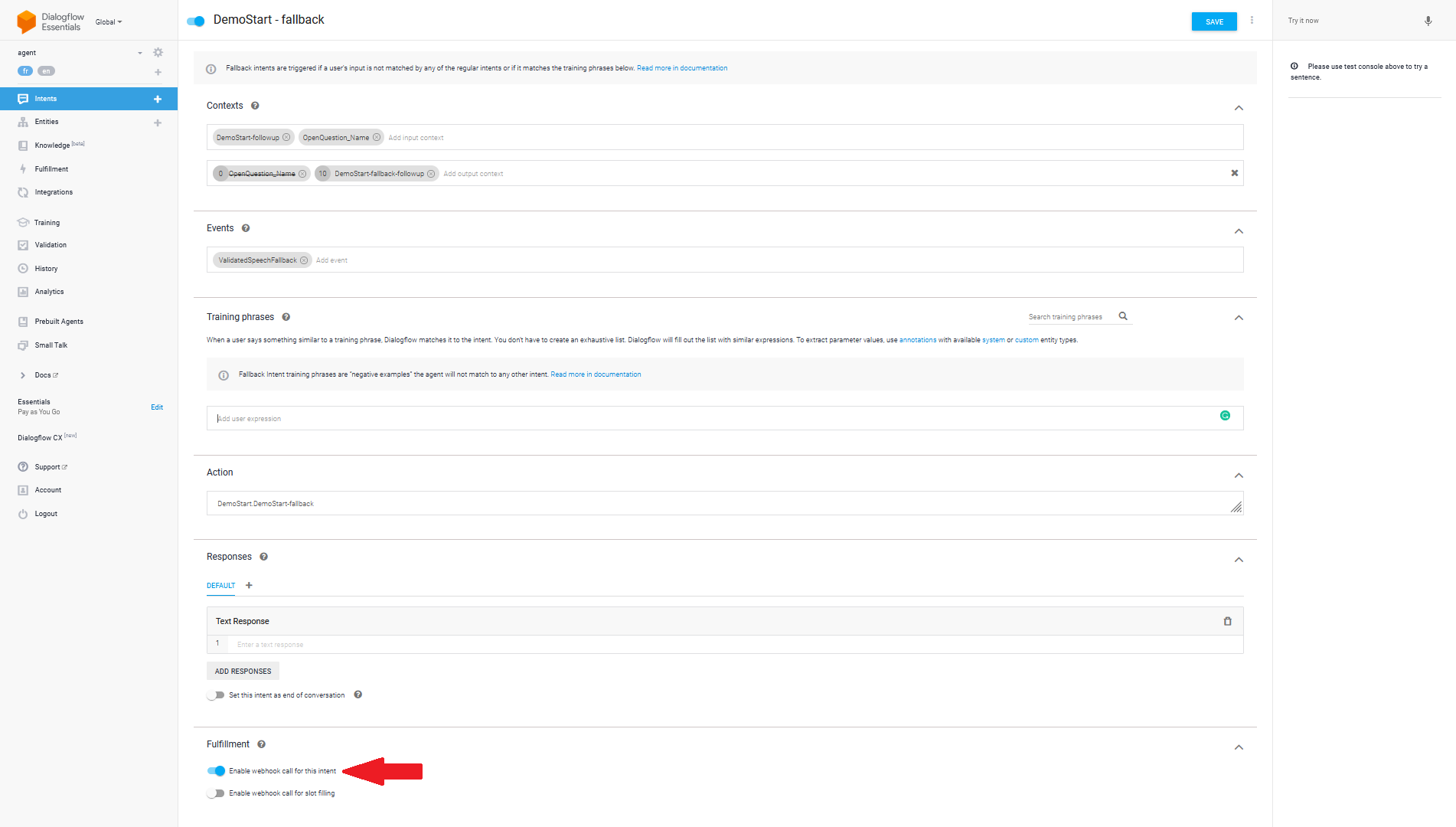

Let's create a Fulfillment to read the user name from the open question answer. This happens in the ChatbotStart - got name fallback intent (as explained in the Open Question Dialogflow section). Notice that the Enable webhook call for this intent is checked.

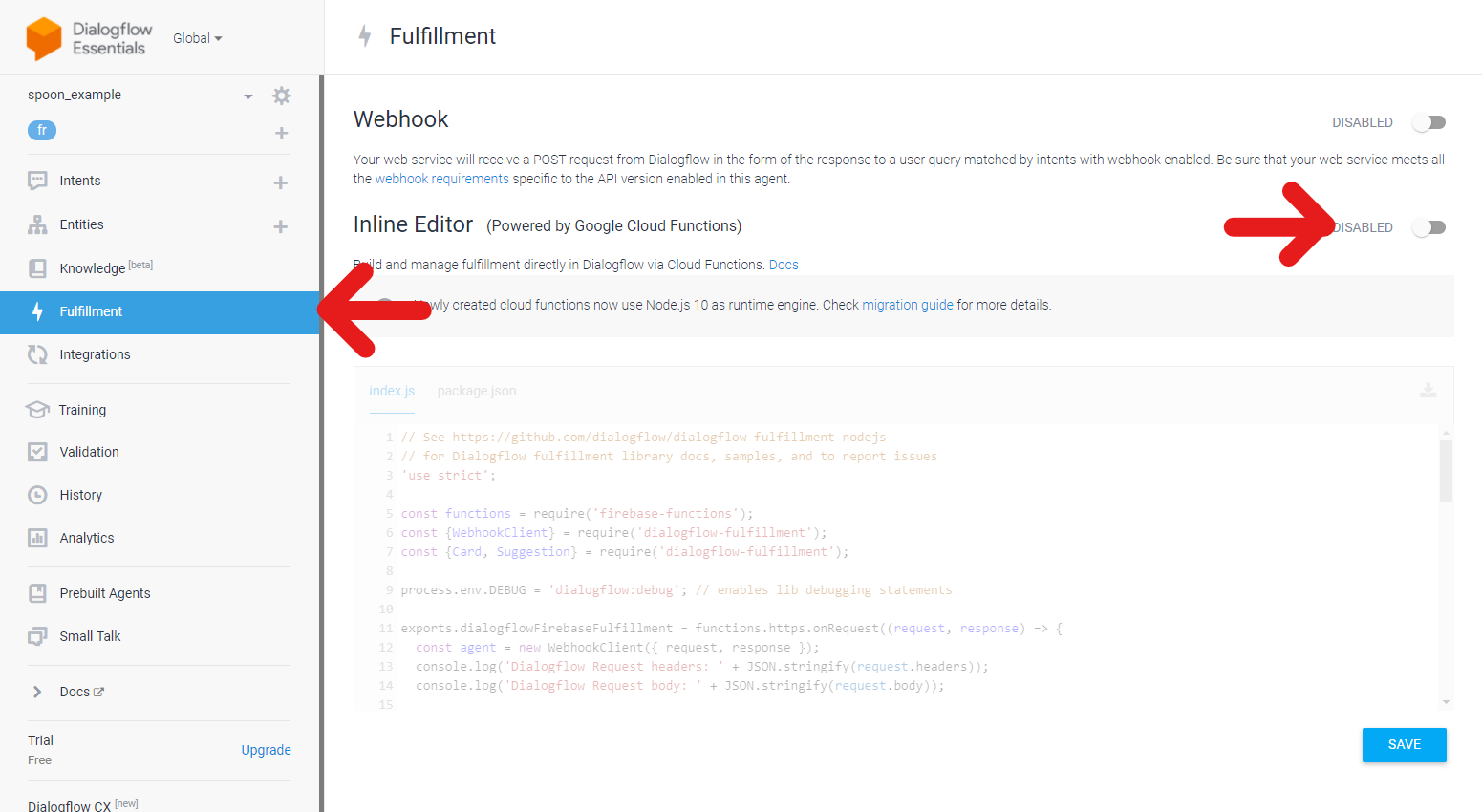

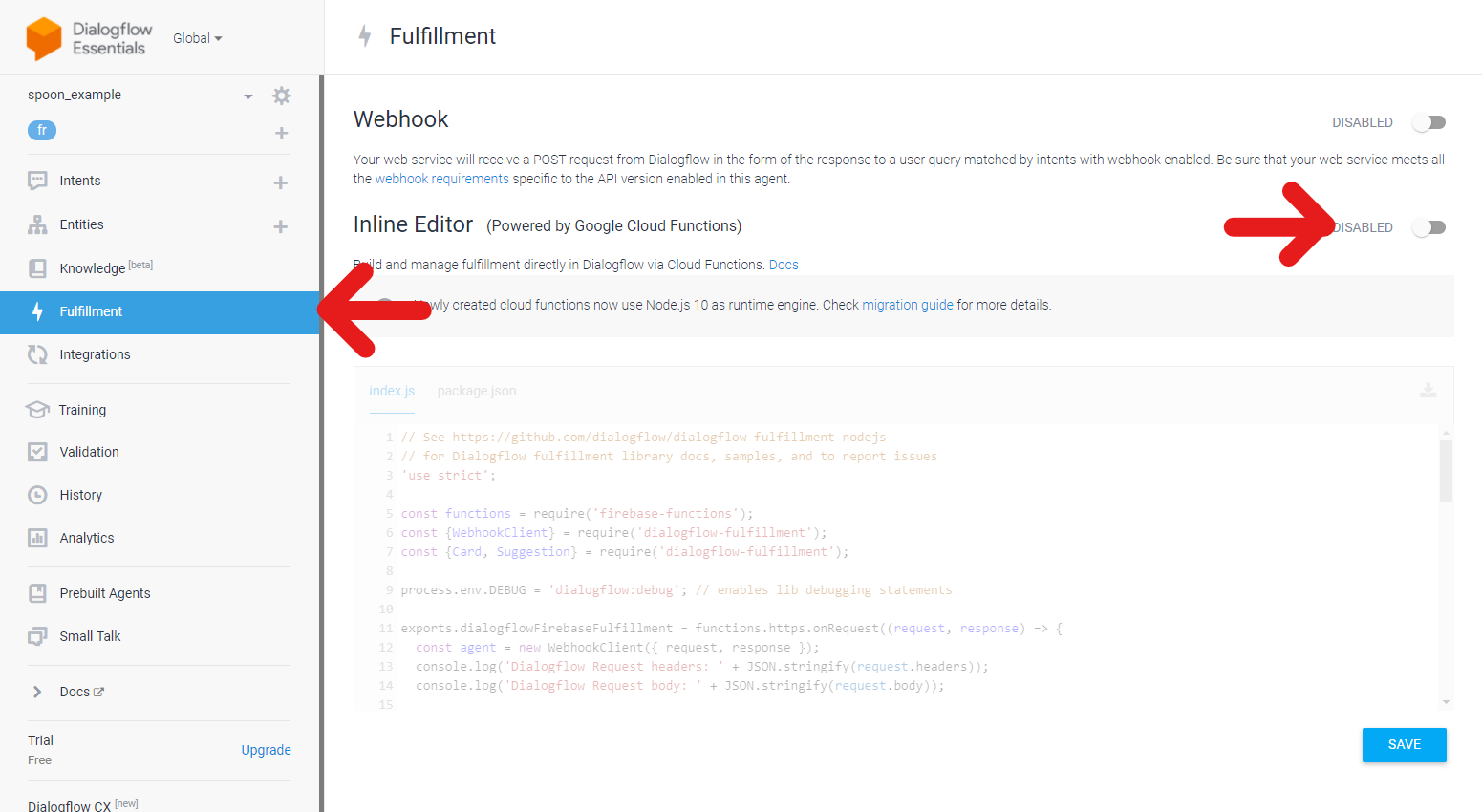

Go to the Fulfillment. For this example, we use the convenient inline editor.

Once enabled, replace the index.js in the inline editor with the code below. Read the comments to understand what it does.

```javascript
'use strict';

// Imports
const functions = require('firebase-functions');
const {WebhookClient, Payload} = require('dialogflow-fulfillment');

process.env.DEBUG = 'dialogflow:debug'; // enables lib debugging statements

exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
  const agent = new WebhookClient({ request, response });

  function openquestionresponse(agent) {
    // We read the input because it contains the user name (typed on the keyboard in answer to our open question).
    // agent.query holds the original user query (queryResult.queryText in the webhook request).
    let username = agent.query;

    // This text will be read by SPooNy:
    // "It was really nice to meet you ${username}! I've finished my demo. Have a nice day!"
    agent.add(`C'était vraiment chouette de faire ta rencontre ${username} ! J'ai fini ma démo. Passe une belle journée!`);

    const payload = {
      spoon: {
        id: "EndScenario",
        status: "Succeeded",
        afterEndType: "Active",
        message: "demo ended"
      }
    };

    // We can also send our custom SPooN payloads
    agent.add(new Payload(agent.UNSPECIFIED, payload, {rawPayload: true, sendAsMessage: true}));
    console.log('Added the payload response: ' + JSON.stringify(payload));
  }

  // Run the proper function handler based on the matched Dialogflow intent name
  let intentMap = new Map();
  // This is where we map our function openquestionresponse to our intent: "ChatbotStart - got name"
  intentMap.set('ChatbotStart - got name', openquestionresponse);
  agent.handleRequest(intentMap);
});
```

WARNING

Older versions of the dialogflow-fulfillment package have a bug when sending payloads. Make sure you also replace the package version in the package.json file of the inline editor with `"dialogflow-fulfillment": "^0.6.1"`.

You are now ready to connect your chatbot to your robot. In this case, the fastest way to get it working is to use SPooN's WithLauncher flow. Here is an example of the services section of the release.conf file I use to link my chatbot. Replace the serviceAccountFileName value with the name of the key file you created. If you haven't created one yet, see the Generate a service account for your chatbot section.

```json
"services": [
  {
    "name": "ServiceTest",
    "canBeProposed": true,
    "iconName": "learning",
    "timeout": 10.0,
    "trigger": {
      "en_US": "Start the test",
      "fr_FR": "Lance le test"
    },
    "explanation": {
      "en_US": "Start the test chatbot",
      "fr_FR": "Lance le chatbot de test"
    },
    "chatbotConfiguration": {
      "engineName": "DialogFlow",
      "connectionConfiguration": {
        "serviceAccountFileName": "YOUR_KEY.json",
        "platformName": "",
        "environmentName": "Draft"
      }
    }
  }
]
```

You can now test the example by starting SPooN's software and saying "lance le test" ("start the test") or sentences that are semantically close to it.

# Dialogflow example 2

Let's build the following example together on Dialogflow.

Download the zipped chatbot here.

Create a new chatbot in Dialogflow - make sure you use the correct default language, French in this example - and import the downloaded zipped example. As shown on the screenshot below, click on the wheel at the top left, then Export and Import and choose the Restore from zip option.

After the import, go back to the intents page. You now have access to the following intents. Feel free to look at each intent to see the different ways to interact with SPooNy: speaking, looking at someone, reacting emotionally, displaying pictures, asking questions, ...

Let's create a Fulfillment to read the user name from the open question answer. This happens in the DemoStart - fallback fallback intent (as explained in the Open Question Dialogflow section). Notice that the Enable webhook call for this intent is checked.

Go to the Fulfillment. For this example, we use the convenient inline editor.

Once enabled, replace the index.js in the inline editor with the code below. Read the comments to understand what it does.

```javascript
'use strict';

// Imports
const functions = require('firebase-functions');
const {WebhookClient, Payload} = require('dialogflow-fulfillment');

process.env.DEBUG = 'dialogflow:debug'; // enables lib debugging statements

exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
  const agent = new WebhookClient({ request, response });

  function openquestionresponse_Name(agent) {
    // We read the input because it contains the user name (typed on the keyboard in answer to our open question).
    // It is not used below, but you can include it in your responses.
    let username = agent.query;

    // SEQUENTIAL PAYLOADS: the skills of the list are played one after the other
    const payload = {
      spoon: {
        id: "List",
        operator: "Sequential",
        skills: [
          {
            id: "ExpressiveReaction",
            expression: { type: "Happy", intensity: 0.3, gazeMode: "Focused" }
          },
          {
            id: "ExpressiveReaction",
            expression: { type: "Happy", intensity: 1, gazeMode: "Focused" }
          },
          {
            id: "MCQ",
            // "Do you want me to explain what an interactive character is?"
            question: "Veux-tu que je t'explique ce qu'est un personnage interactif ?",
            visualTemplate: {
              type: "Grid",
              buttonLayout: { type: "Background" }
            },
            visualAnswers: true,
            answers: [
              {
                content: "Oui",              // "Yes"
                displayText: "Bien sûr !",   // "Of course!"
                imageUrl: "https://docs.spoon.ai/static/examples/Demo-1.3.0/MCQ_green-square_1.png"
              },
              {
                content: "Montre le menu",   // "Show the menu"
                displayText: "Pas vraiment", // "Not really"
                imageUrl: "https://docs.spoon.ai/static/examples/Demo-1.3.0/MCQ_red-square_1.png"
              }
            ]
          }
        ]
      }
    };

    // We can also send our custom SPooN payloads
    agent.add(new Payload(agent.UNSPECIFIED, payload, {rawPayload: true, sendAsMessage: true}));
    console.log("Added the payload response: " + JSON.stringify(payload));
  }

  // Run the proper function handler based on the matched Dialogflow intent name
  let intentMap = new Map();
  // This is where we map our function openquestionresponse_Name to our intent: "DemoStart - fallback"
  intentMap.set("DemoStart - fallback", openquestionresponse_Name);
  agent.handleRequest(intentMap);
});
```

WARNING

Older versions of the dialogflow-fulfillment package have a bug when sending payloads. Make sure you also replace the package version in the package.json file of the inline editor with `"dialogflow-fulfillment": "^0.6.1"`.

You are now ready to connect your chatbot to your robot. In this case, the fastest way to get it working is to use SPooN's custom interaction flow. Here is an example of the interactionFlow section of the release.conf file I use to link my chatbot. Replace the serviceAccountFileName value with the name of the key file you created. If you haven't created one yet, see the Generate a service account for your chatbot section.

```json
"interactionFlow": {
  "type": "Custom",
  "main": {
    "chatbotConfiguration": {
      "engineName": "DialogFlow",
      "connectionConfiguration": {
        "serviceAccountFileName": "YOUR_KEY.json",
        "platformName": "",
        "environmentName": "Draft"
      }
    }
  }
}
```

To finish the configuration of your chatbot, add the following files to the Conf folder of your release:

- SpecificDictationConstraints_demo.csv

- TextPronunciation_demo.csv

Then reference these two files in your release.conf as follows:

```json
"specificDictationConstraints": [
  "SpecificDictationConstraints_demo.csv"
],
"specificPronunciations": [
  "TextPronunciation_demo.csv"
],
```

You can now test the example by starting SPooN's software, standing in front of the camera and saying "bonjour" or words that are semantically close to it.

# Inbenta

Inbenta's platform is connected to SPooN's SDK. Please follow their documentation to learn how to create a chatbot.

# Connecting Inbenta to a SPooN release

# Retrieving your Inbenta credentials

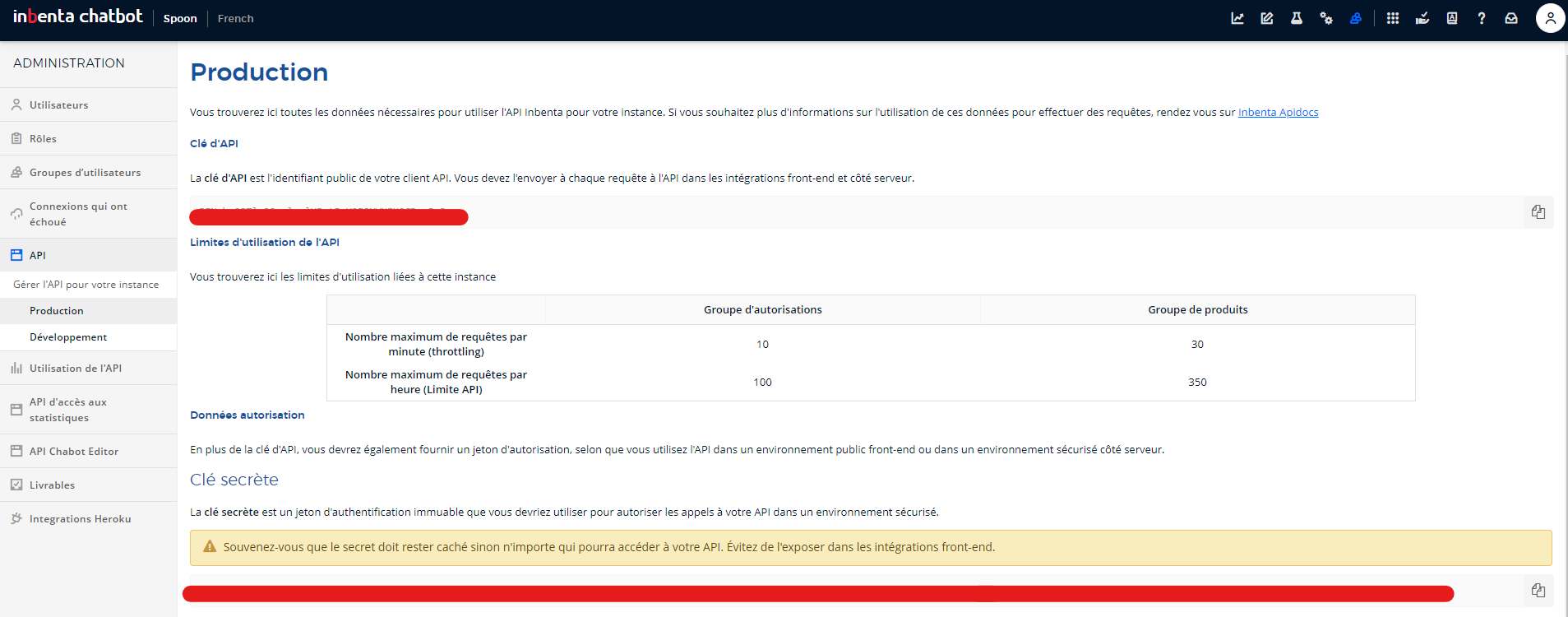

The information you need to connect to Inbenta is on the administration page of your Inbenta platform. Click on the administration icon in the top bar, then on API in the left menu. Choose whether you want to connect to your development or production environment.

You need the following information to connect your Inbenta chatbot to SPooN's SDK:

- Inbenta API key: keep this API key handy, because you'll use it in the release.conf file as described in the instructions below

- Inbenta secret key: save this secret key in a file (for example inbenta.key). Put this file next to the release.conf, which you can usually find at `C:/Program Files/SPooN/developers-1.6.0/release/Conf`.

# Link Inbenta chatbots in the interaction flow

You can connect your chatbots at different steps of the interaction flow. See the documentation about the Interaction Flow concepts and the technical details on how to customize your interaction flow using chatbots.

The connection of your chatbot relies on the definition of a ChatbotConfiguration. For Inbenta chatbots:

```json
"chatbotConfiguration": {
  "engineName": "Inbenta",
  "connectionConfiguration": {
    "inbentaApiKey": <string>,
    "inbentaPrivateKeyFileName": <string>
  }
}
```

- engineName: set it to "Inbenta", because you are using the Inbenta chatbot engine

- connectionConfiguration: contains the information needed to connect SPooN's software to your Inbenta chatbot

  - inbentaApiKey: the API key that you find in the Inbenta API settings, as described above.

  - inbentaPrivateKeyFileName: full name of the file that contains your Inbenta secret key. Make sure this file has actually been copied next to the release.conf in the SPooN release configuration folder, `C:/Program Files/SPooN/developers-1.6.0/release/Conf`, as explained above. If you followed the instructions above, the file name is inbenta.key.

# How to bind the main entry of a Service with your Inbenta chatbot

As explained in the technical documentation about ServiceConfiguration, a service can be connected to a chatbot. If the canBeProposed field of your service is set to true, the service gets a main entry point, which is accessible in the Launcher:

- by clicking on its icon

- by saying its trigger example

TIP

This will send the event "Spoon_GenericStart" to your chatbot. You can also customize it in the chatbotConfiguration to use your own event name.

For your chatbot to react to this event, add the event "Spoon_GenericStart" (or the event name you chose in your customization) to the "DIRECT_CALL" field of one of the intents of your agent.

# How to send custom messages to SPooN

SPooN will read the text of the answer that you wrote in Inbenta. SPooNy will show the images as well.

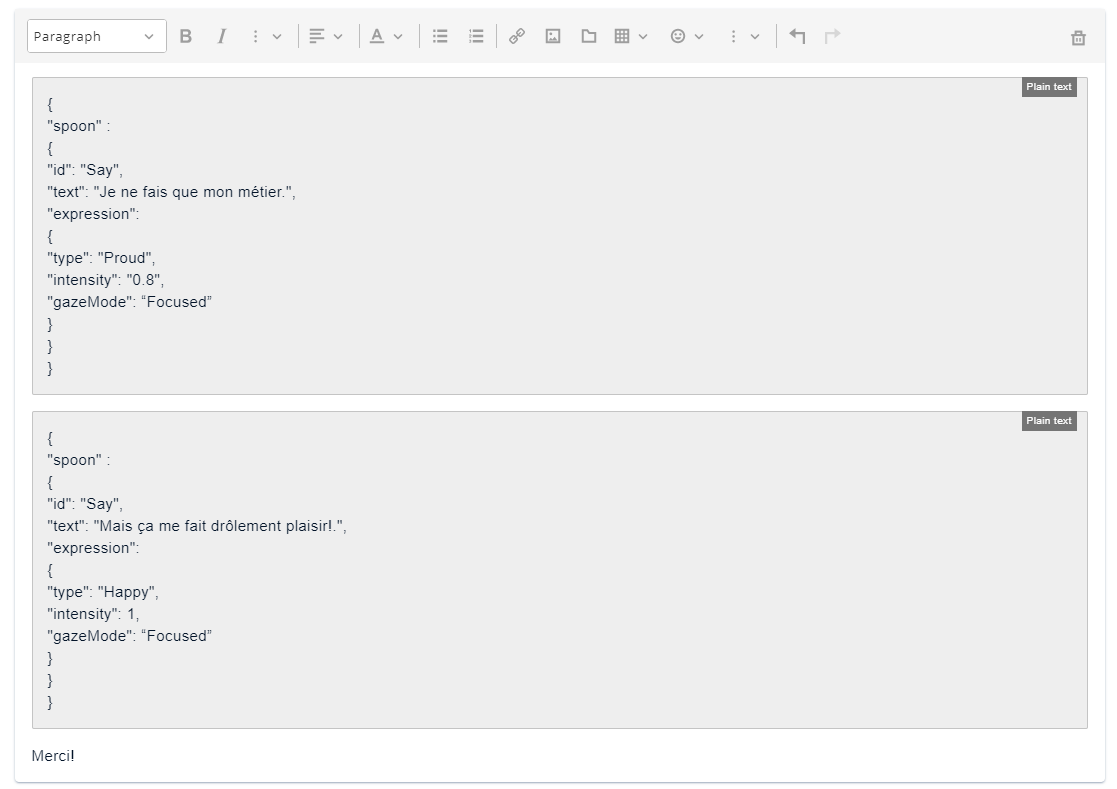

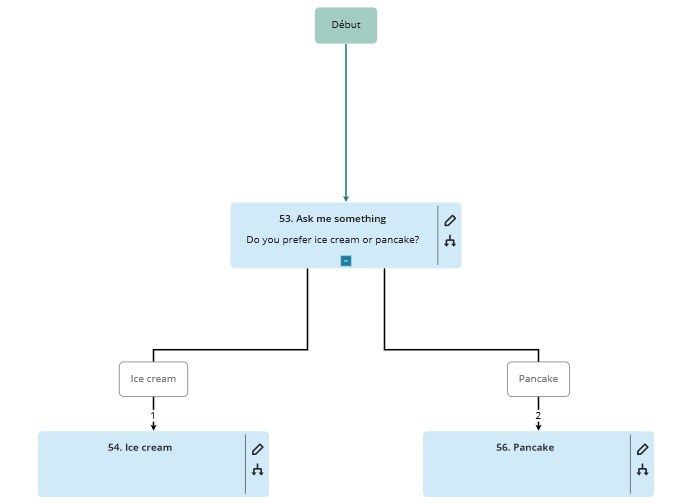

But if you want to make better use of SPooN's capabilities (make SPooNy smile, multi-choice question,...), you should use SPooN's custom payloads. In the rich text editor, choose the format Code block from the editor (using the default Plain Text is fine).

TIP

You can use multiple answers (by clicking the blue + button next to the answer). They will be played sequentially.

TIP

You can use multiple paragraphs or payloads per answer box. SPooNy then says and displays each paragraph one after the other, which breaks up the rhythm of the answer.

Make sure they are in separate blocks. To achieve this, first create a few empty lines, then replace the ones you want with a code block. It should look something like this.

The payloads are described in the SPooN specific messages section.

# Inbenta specific tips & tricks

# Open Question - Inbenta

If you're capturing free-speech user input (such as a name), use the OpenQuestion payload in your intent, as described here.

Let's use the following example:

```json
{
  "spoon": {
    "id": "OpenQuestion",
    "questionId": "Name",
    "question": "What is your name?"
  }
}
```

For this mechanism to work, do the following:

- Create a variable from the Inbenta Variables interface. The name of the variable is normalized: OpenQuestion_ + the questionId that you chose in the payload (so OpenQuestion_Name in our example). In validation, select the Data type only option, because the answer is free speech.

- Create a new dialog tree that starts when the user's answer is received. Your first intent (the one connected to Start in the Inbenta Dialog tree) uses the DIRECT_CALL trigger OpenQuestion_ + the questionId that you chose in the payload (so OpenQuestion_Name in our example).

Your intent will be triggered when the user answers the open question and you will be able to retrieve the content of their answer in the variable OpenQuestion_ + questionId that you created, using Inbenta syntax for variables VariableName (so OpenQuestion_Name in our example).

# Creating a Speech Fallback mechanism - Inbenta

Check the concept section to understand what the validated speech fallback mechanism is.

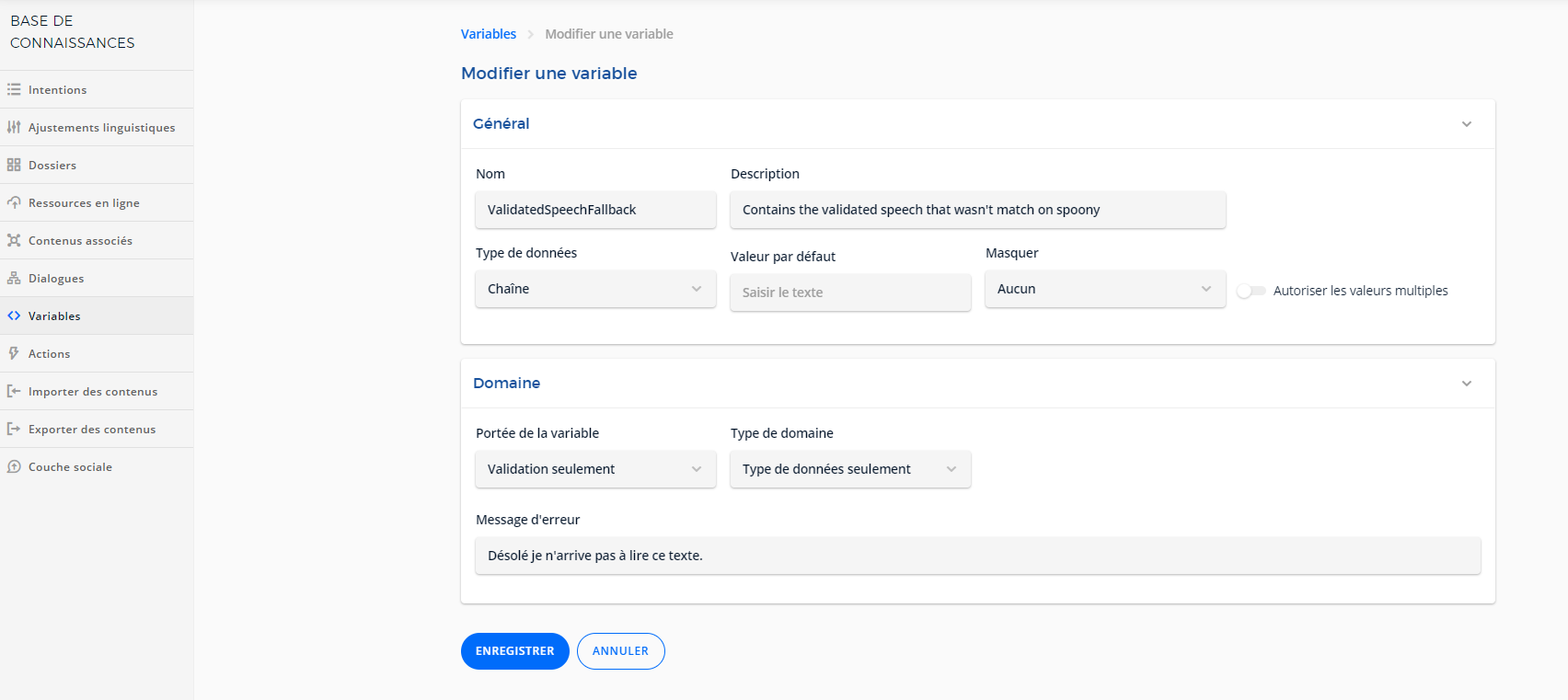

First create a ValidatedSpeechFallback variable in the Inbenta Variables interface. As you can see from the screenshot below, the data type is a string, and you're only looking at validating the data type here as the variable stores freespeech text.

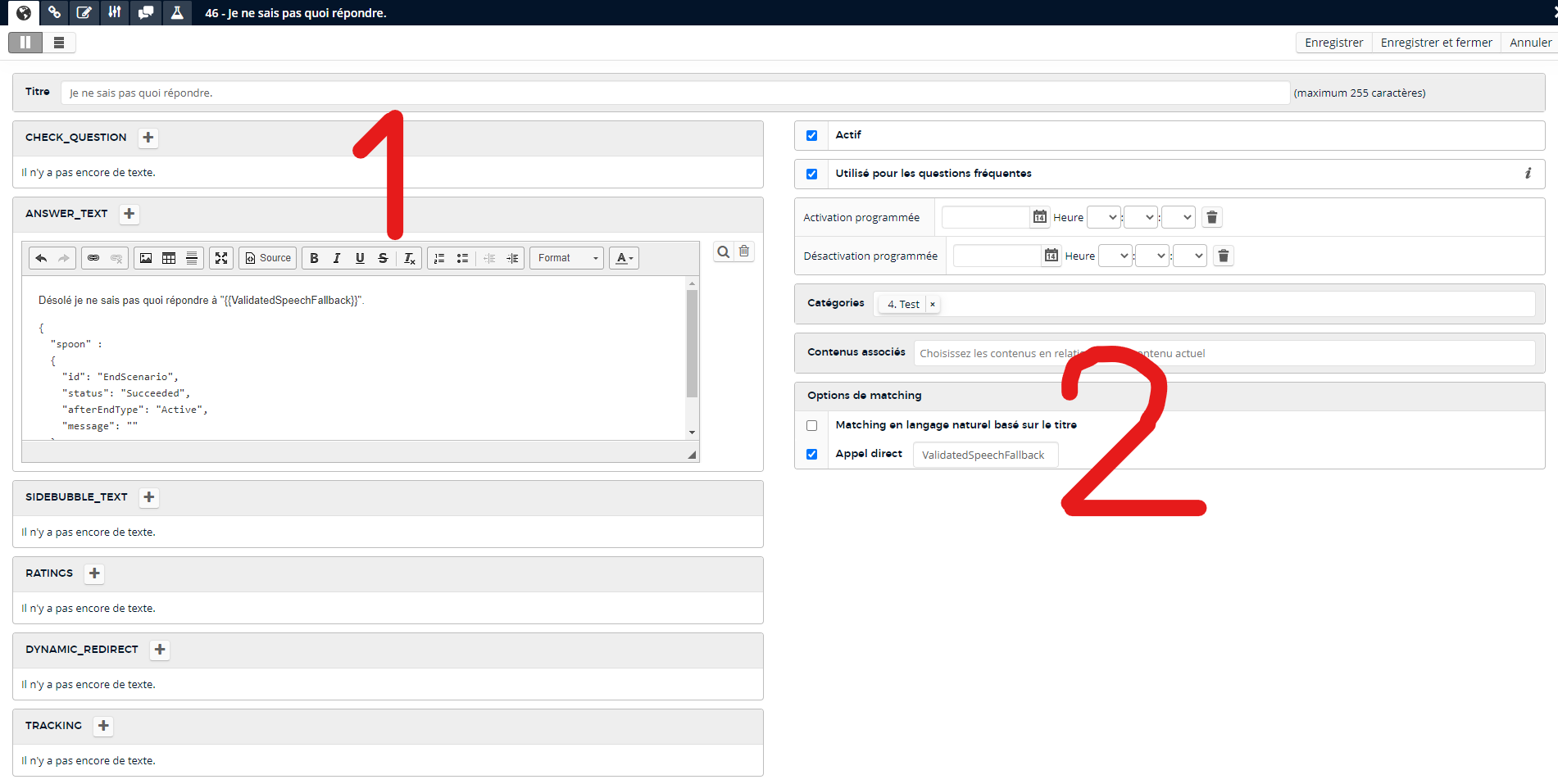

Once you've created the ValidatedSpeechFallback variable and activated the mechanism in the release.conf, create a new intent (or dialog tree) in Inbenta. As displayed below, keep these two points in mind:

- The variable ValidatedSpeechFallback contains the validated speech.

- Set the direct call to ValidatedSpeechFallback. It is triggered when the user validates the speech by clicking on it.

# Multiple Choice Question - Inbenta

Basic architecture using Dialog with children intents

This is a very simple way of creating an MCQ (Multiple choice question) in Inbenta. By using the Dialog mode of Inbenta you can give the user a choice, and they will be able to choose using buttons on SPooN's UI.

There are some limitations with this method: it will only display the possible answers as buttons (without images). The text of the button is the text used in the transition in Inbenta.

To set it up in Inbenta, choose the option Dialog when creating a new intention. It will create a tree with one question and two choices - you can use this as a base to add more choices to the MCQ.

- The question is the first intent at the top of the created tree.

- Each child intent represents a potential answer.

You need to use the first transition type: `Display all children nodes at the same time`. This is the default option when creating a new Dialog in Inbenta.

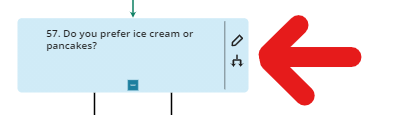

Here is a simple example of what the dialog tree looks like:

Using Spoon MCQ payload

If you want to have more options for the MCQ interaction on the character (for example displaying images for the choices, etc.), you can use the MCQ payload.

To do this, create a Dialog with a question intent and one child intent per answer, as before. Then:

- add an MCQ Payload in the response field of the question. You can find the details for the MCQ payload here

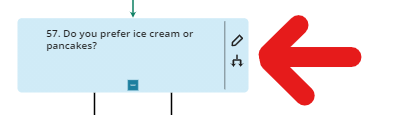

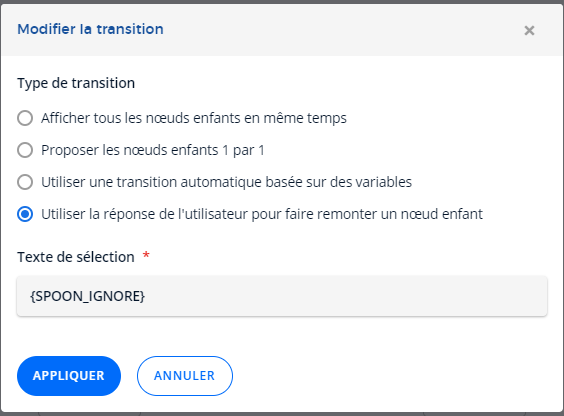

- Click on the transition type selection on the question intent, as indicated by the red arrow on the screenshot below.

- You can now select the 4th transition type: "use the user's answer to match a child node". Set the transition text to `{SPOON_IGNORE}` in the text field, as indicated below.

- Fill in the children intents - the potential answers - with the reaction you want SPooNy to have based on which answer was selected.

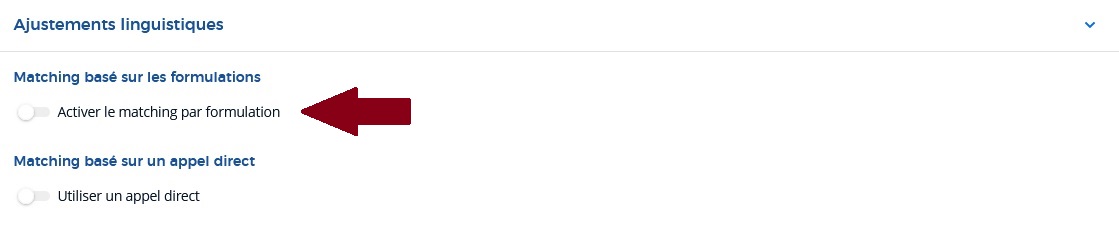

Children nodes behavior based on matching choice

You can choose to disable matching based on formulations so it can only trigger inside the dialog tree, for example if you offer a yes / no answer, you don't want this intent to trigger each time someone says yes in some other part of the conversation. If you leave it enabled, then it's possible to trigger that intent directly without going through the question intent.

# Tock

The Open Conversation Kit - Tock is connected to SPooN's SDK. You can try Tock on their demo website, or you can deploy the Tock platform locally.

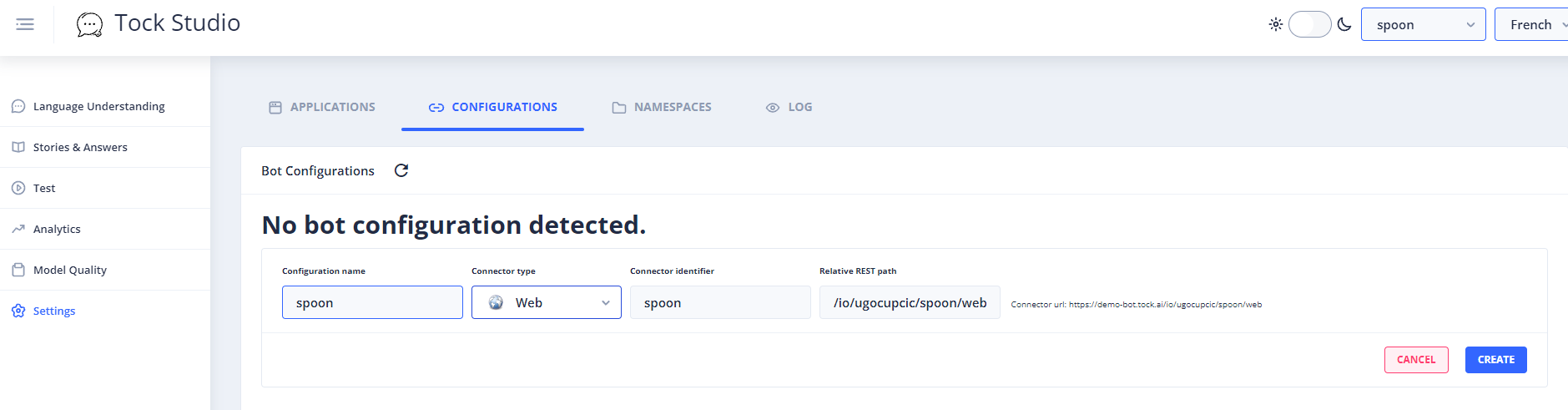

# Connecting Tock to a SPooN release

We use the Web connection type to interface SPooN and Tock. When creating this connection type, you'll be able to get the connector URL to use to interface with your chatbot. You can see how it appears on the screenshot below.

# Link Tock chatbots in the interaction flow

You can connect your chatbots at different steps of the interaction flow. See the documentation about the Interaction Flow concepts and the technical details on how to customize your interaction flow using chatbots.

The connection of your chatbot relies on the definition of a ChatbotConfiguration. For Tock chatbots:

```json
"chatbotConfiguration": {
  "engineName": "Tock",
  "connectionConfiguration": {
    "url": <string>
  }
}
```

- engineName: set it to "Tock"

- connectionConfiguration: contains the information needed to connect SPooN's software to your Tock chatbot

- url: is the connector URL you retrieved in the previous step

# How to bind the main entry of a Service with your Tock chatbot

When an interaction starts with a user, the event "Spoon_GenericStart" is sent to your chatbot. You can also customize it in the chatbotConfiguration to use your own event name.

For your chatbot to react to this event, do the following:

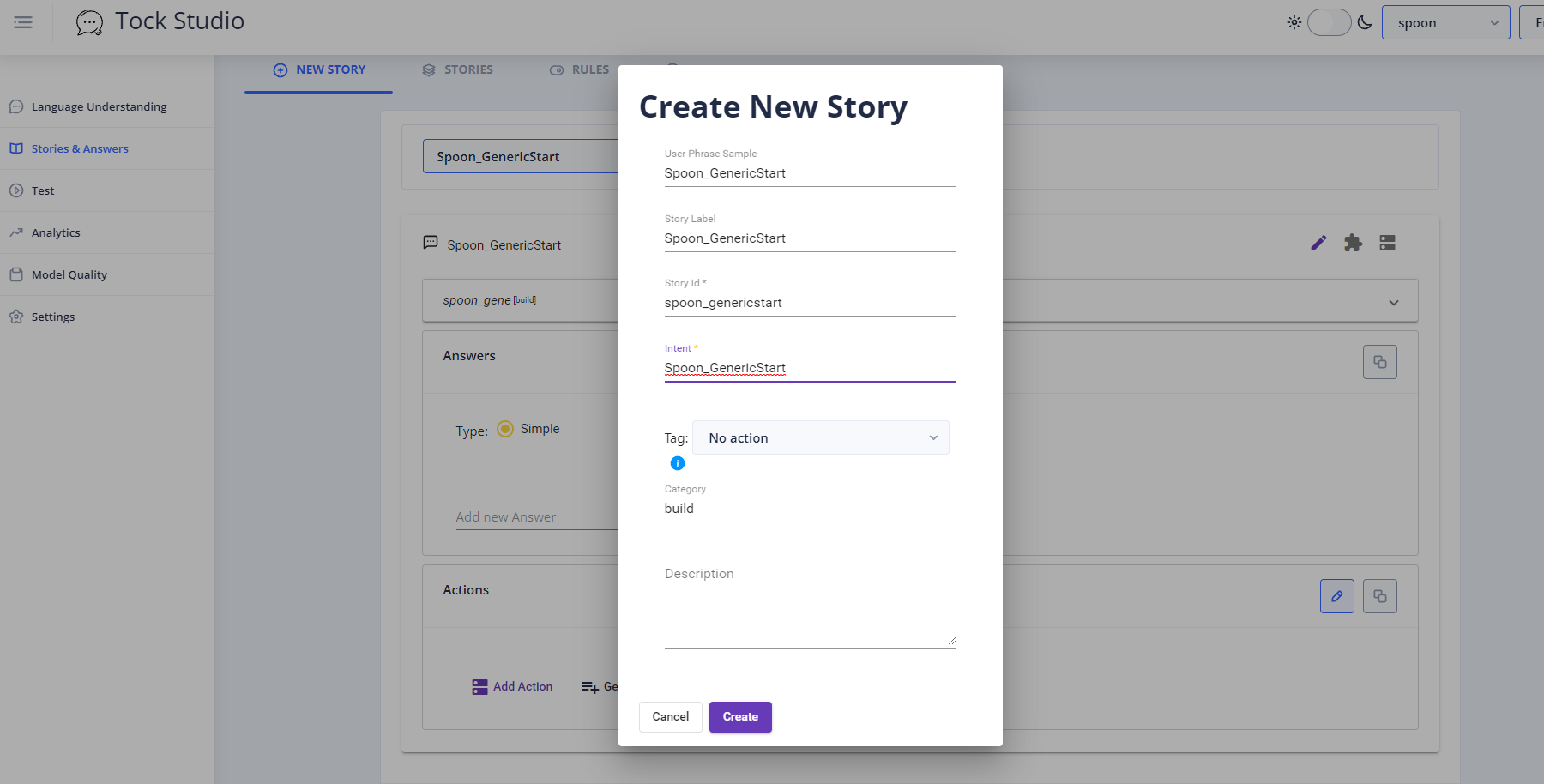

- create a new story called Spoon_GenericStart (or the event trigger you used in your customization).

- directly edit this story by clicking on the pen icon at the right of the name, and use the same name for the story id and intent name as pictured below

- Add the response you want to the Answers in your story. This is what SPooNy will do when the scenario is triggered.

# How to send custom messages to SPooN

SPooN will read the text of the answer that you wrote in Tock. SPooNy will show the images as well.

To make better use of SPooN's capabilities (make SPooNy smile, ask multiple-choice questions, ...), use SPooN's custom payloads. The payloads are described in the SPooN specific messages section. Write the payloads directly in the answer field of the story.

TIP

Reading and writing the payloads in the text field of Tock's stories is challenging. We recommend writing them in your favourite editor, with JSON validation.
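One way to do this validation is to parse the payload and check the `"spoon"` envelope before you paste it into a story. `checkSpoonPayload` is a hypothetical helper. It only checks JSON syntax and the presence of `spoon.id`, not the fields of each skill. For a valid payload, it returns a compact one-line version.

```javascript
// Sketch: validate a SPooN payload before pasting it into a Tock story answer.
// checkSpoonPayload is a hypothetical helper; it does not validate skill fields.
function checkSpoonPayload(text) {
  let parsed;
  try {
    parsed = JSON.parse(text);
  } catch (err) {
    return "invalid JSON: " + err.message;
  }
  if (!parsed || typeof parsed.spoon !== "object" || parsed.spoon === null) {
    return 'missing top-level "spoon" object';
  }
  if (typeof parsed.spoon.id !== "string") {
    return 'missing "spoon.id"';
  }
  // Compact one-line version of the valid payload
  return "ok: " + JSON.stringify(parsed);
}

console.log(checkSpoonPayload('{ "spoon": { "id": "OpenQuestion", "questionId": "Name", "question": "What is your name?" } }'));
console.log(checkSpoonPayload('{ "spoon": { "id": "OpenQuestion", } }')); // trailing comma: invalid JSON
```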

# Tock specific tips & tricks

# Open Question - Tock

If you're capturing free-speech user input (such as a name), use the OpenQuestion payload in your intent, as described here.

Let's use the following example:

```json
{
  "spoon": {
    "id": "OpenQuestion",
    "questionId": "Name",
    "question": "What is your name?"
  }
}
```

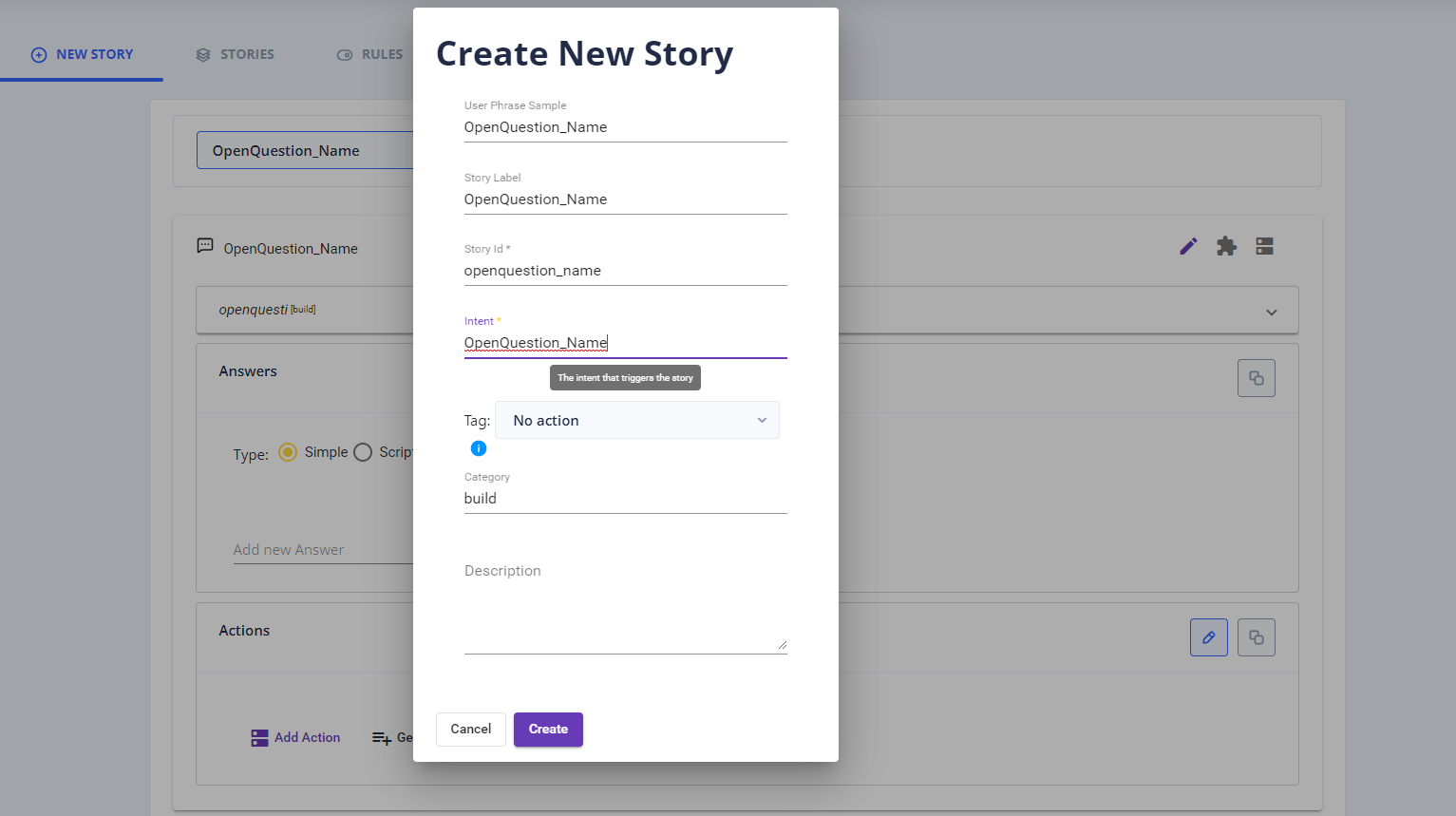

For this mechanism to work, do the following:

- create a new story named OpenQuestion_ + the questionId that you chose in the payload (so OpenQuestion_Name in our example).

- edit this story directly by clicking on the pen icon to the right of its name, and use the same name for the story id and the intent name, as pictured below

- in the Answers of the story, select the type Script and modify the following code to do what you want with the user's response.

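```kotlin
import ai.tock.bot.definition.story
import ai.tock.bot.definition.ParameterKey

// Parameter key under which the user's answer to the open question is available
class OpenQuestionAnswer : ParameterKey {
    override val name: String = "openquestion_answer"
}

val s = story("OpenQuestion_Name") {
    val param = OpenQuestionAnswer()
    // Read the user's answer (ent is null if the parameter is missing)
    val ent = this.choice(param)
    end("Nice to meet you " + ent?.toString() + "!")
}
```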

Your intent is triggered when the user answers the open question and you are able to retrieve the content of their answer with the code above.

# Creating a Speech Fallback mechanism - Tock

Check the concept section to understand what the validated speech fallback mechanism is.

Here is how to use the Validated Speech Fallback mechanism in Tock:

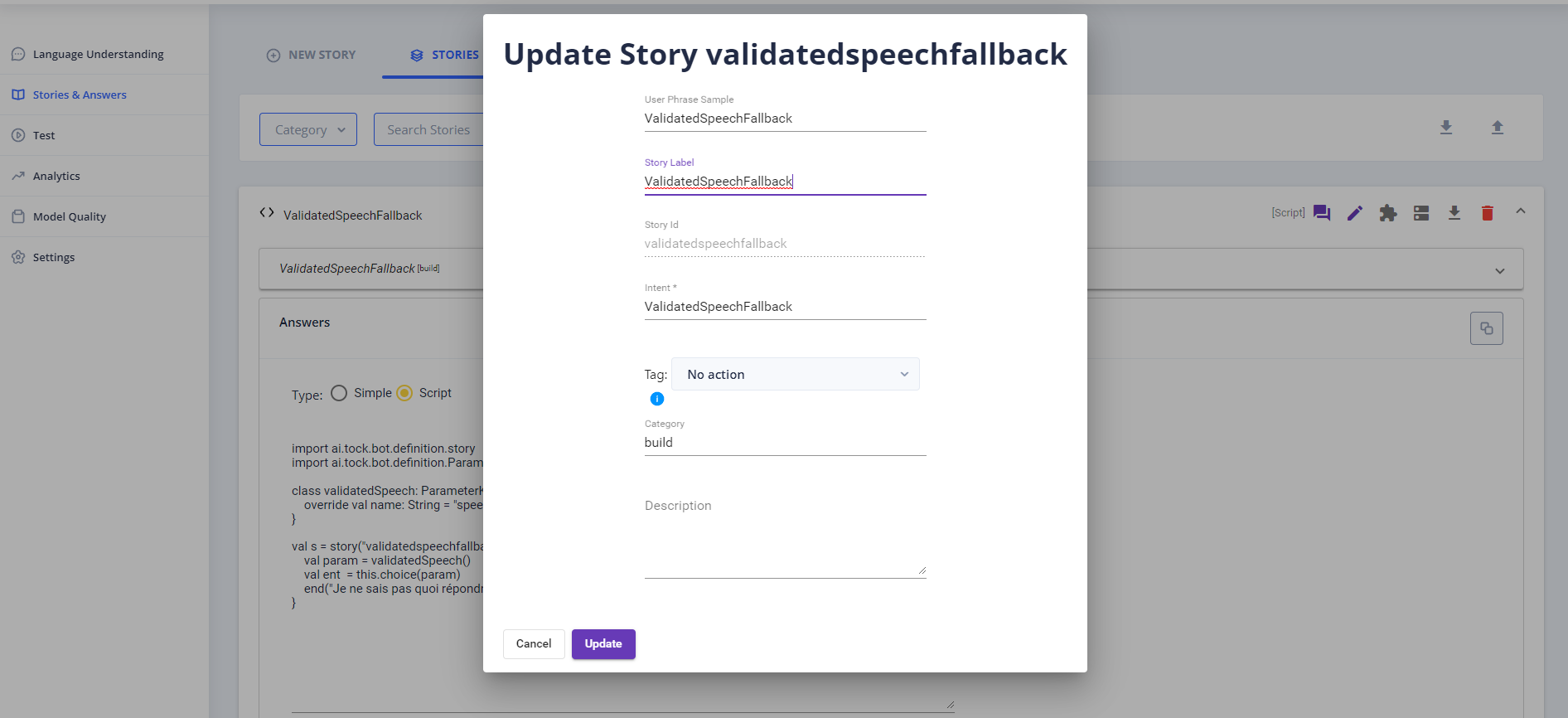

- activate the validated speech fallback mechanism in the release.conf

- create a new story named ValidatedSpeechFallback

- edit this story directly by clicking on the pen icon to the right of its name, and use the same name for the story id and the intent name, as pictured below

- in the Answers of the story, select the type Script and modify the following code to do what you want with the user's response.

```kotlin
import ai.tock.bot.definition.story
import ai.tock.bot.definition.ParameterKey

// Parameter key under which the validated speech is available
class ValidatedSpeech : ParameterKey {
    override val name: String = "speech"
}

val s = story("validatedspeechfallback") {
    val param = ValidatedSpeech()
    val ent = this.choice(param)
    // "I don't know what to answer to: <validated speech>"
    end("Je ne sais pas quoi répondre à: " + ent?.toString())
}
```

# Multiple Choice Question - Tock

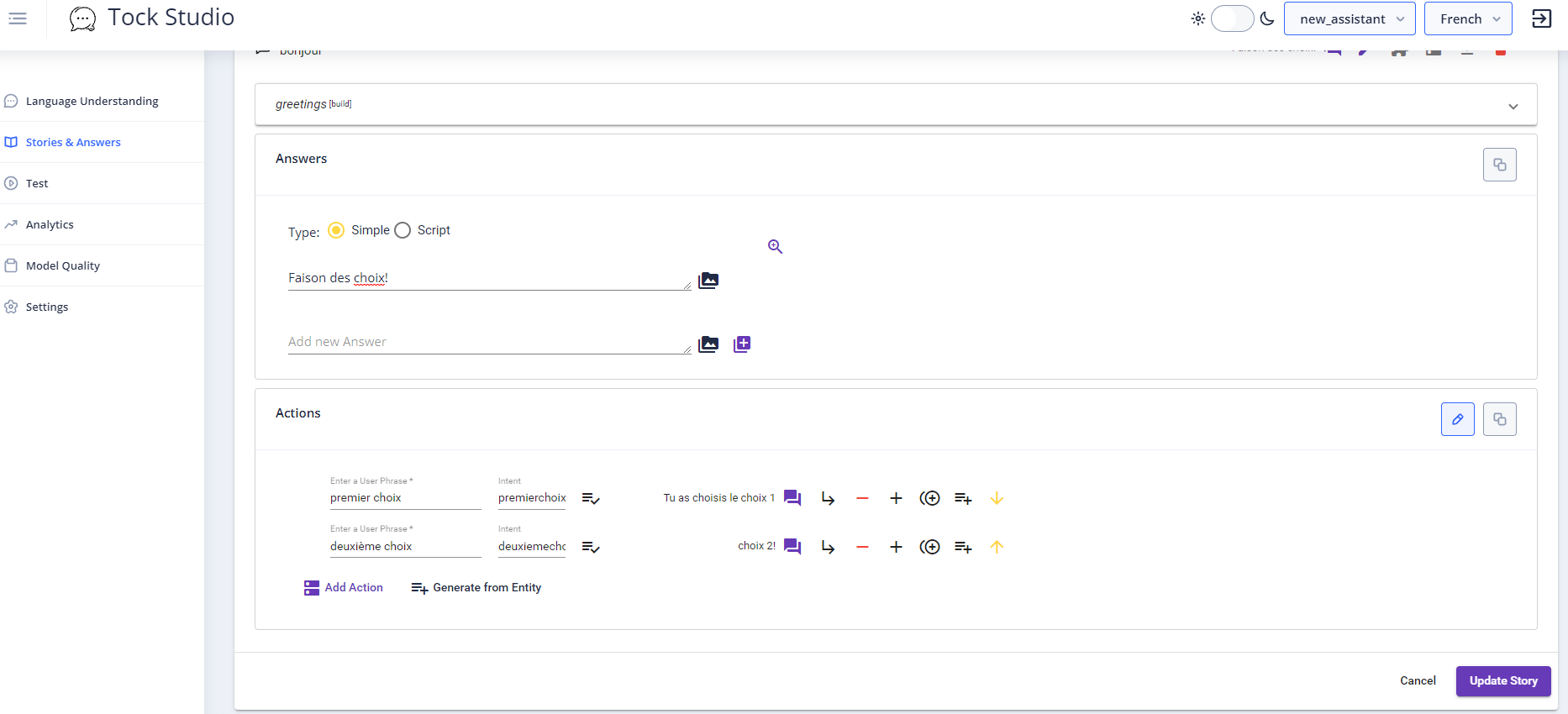

By using the Actions in a Tock Story, you can give the user a choice, and they will be able to choose using buttons on SPooN's UI.

To set it up in Tock, fill the choices in the actions part of the response in your story.

Here is a simple example of what the actions look like:

# SPooN specific messages

# List of Skills

Action description: You can combine different skills in a list to have them played randomly or sequentially.

Message description:

```json
{
  "spoon": {
    "id": "List",
    "operator": <TypeEnum>,
    "skills": [
      ...
    ]
  }
}
```

- id: ID of the Action ⇒ "List"

- operator: which operator should be used to play the list.

  - Possible values:

    - "Random": SPooN plays one of the skills in the list, chosen at random

    - "Sequential": SPooN plays all the skills in the list, one after the other.

  - Default value (when the field is not set): "Sequential"

- skills: a list of skills as JSON payloads. You can even include a payload for another list inside this list.

Here is a short example of a list of two skills played randomly - it will show one of the two images in the list at random:

```json
{
  "spoon": {
    "id": "List",
    "operator": "Random",
    "skills": [
      {
        "id": "DisplayImage",
        "imagePath": "https://docs.spoon.ai/static/docs/img/HOMME-4.png"
      },
      {
        "id": "DisplayImage",
        "imagePath": "https://docs.spoon.ai/static/docs/img/HOMME-5.png"
      }
    ]
  }
}
```
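The same payloads can also be built in code, for instance in a Node.js fulfillment like the QRCode example further down. The sketch below uses hypothetical helpers (listSkill, displayImageSkill, spoonMessage) that are not part of SPooN's or Dialogflow's API, and it assumes that a nested list is written inside "skills" with the same inner shape as the other skills, as the example above suggests.

```javascript
// Hypothetical helpers, not part of SPooN's or Dialogflow's API.
const LIST_OPERATORS = ["Random", "Sequential"];

// Builds the inner "List" skill. Leaving operator undefined omits the field,
// so SPooN applies its default ("Sequential").
function listSkill(skills, operator) {
  if (operator !== undefined && !LIST_OPERATORS.includes(operator)) {
    throw new Error(`Unknown List operator: ${operator}`);
  }
  const skill = { id: "List" };
  if (operator !== undefined) skill.operator = operator;
  skill.skills = skills;
  return skill;
}

function displayImageSkill(imagePath) {
  return { id: "DisplayImage", imagePath };
}

// Wraps a skill into the top-level object expected by SPooN.
function spoonMessage(skill) {
  return { spoon: skill };
}

// Sequential list: a first image, then one of two images picked at random.
// Assumption: the nested list is written like any other skill in "skills".
const message = spoonMessage(
  listSkill([
    displayImageSkill("https://docs.spoon.ai/static/docs/img/HOMME-4.png"),
    listSkill(
      [
        displayImageSkill("https://docs.spoon.ai/static/docs/img/HOMME-4.png"),
        displayImageSkill("https://docs.spoon.ai/static/docs/img/HOMME-5.png"),
      ],
      "Random"
    ),
  ])
);

console.log(JSON.stringify(message, null, 2));
```

Omitting the operator rather than always writing "Sequential" keeps the payload minimal and leaves the default to SPooN.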

# Say (basic, without expression)

Action description: Spoony says something

Message description:

- Message type: Text Response

- Content : “textToSay”

# Expressive Say (Say with expression)

Action description: Spoony says something while expressing a chosen expression

Message description:

```json
{
  "spoon": {
    "id": "Say",
    "text": <string>,
    "expression": {
      "type": <TypeEnum>,
      "intensity": <float>,
      "gazeMode": <GazeModeEnum>
    },
    "bang": <bool>
  }
}
```

- id: ID of the Action ⇒ "Say"
- text: text to say
- expression: expression shown by Spoony while saying the text
  - type: type of the expression
    - enum among the following list: ["Standard", "Happy", "Sad", "Surprised", "Scared", "Curious", "Proud", "Mocker", "Crazy"]
  - intensity: intensity of the expression
    - float between 0 and 1 (1 corresponding to the maximum intensity of the expression)
  - gazeMode: expected behavior of Spoony's gaze (eyes) during the expression
    - enum among the following list:
      - "Focused": Spoony keeps looking at the user during the whole expression.
      - "Distracted": Spoony might look away from the user during the expression (but it looks back at the user at the end of the expression).
- bang: the character starts its expression with an expressive reaction (without sound), the bang, followed by a less pronounced looped animation.
  - default value: true, the bang is played before the looped animation.
  - when set to false, the bang is not played, only the looped animation.
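A fulfillment can check the documented constraints (expression type, intensity between 0 and 1, gaze mode) before sending the payload. This is a minimal sketch; expressiveSay is a hypothetical helper, not part of SPooN's API.

```javascript
// Hypothetical helper, not part of SPooN's API: builds an expressive "Say"
// message and checks the constraints listed above.
const EXPRESSION_TYPES = [
  "Standard", "Happy", "Sad", "Surprised", "Scared",
  "Curious", "Proud", "Mocker", "Crazy",
];
const GAZE_MODES = ["Focused", "Distracted"];

function expressiveSay(text, { type, intensity, gazeMode, bang = true }) {
  if (!EXPRESSION_TYPES.includes(type)) {
    throw new Error(`Unknown expression type: ${type}`);
  }
  // Also rejects NaN and non-numeric values
  if (typeof intensity !== "number" || !(intensity >= 0 && intensity <= 1)) {
    throw new Error("intensity must be a number between 0 and 1");
  }
  if (!GAZE_MODES.includes(gazeMode)) {
    throw new Error(`Unknown gaze mode: ${gazeMode}`);
  }
  return {
    spoon: {
      id: "Say",
      text,
      expression: { type, intensity, gazeMode },
      bang,
    },
  };
}

// Spoony greets the user happily while keeping eye contact, without the bang.
const greeting = expressiveSay("Hello, nice to see you!", {
  type: "Happy",
  intensity: 0.8,
  gazeMode: "Focused",
  bang: false,
});

console.log(JSON.stringify(greeting));
```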

# Expressive Reaction

Action description: Spoony will express a short expressive reaction.

Message description:

```json
{
  "spoon": {
    "id": "ExpressiveReaction",
    "expression": {
      "type": <TypeEnum>,
      "intensity": <float>,
      "gazeMode": <GazeModeEnum>
    }
  }
}
```

- id: ID of the Action ⇒ "ExpressiveReaction"
- expression: expression to be expressed by Spoony
  - type: type of the expression
    - enum among the following list: ["Standard", "Happy", "Sad", "Surprised", "Scared", "Curious", "Proud", "Mocker", "Crazy"]
  - intensity: intensity of the expression
    - float between 0 and 1 (1 corresponding to the maximum intensity of the expression)
  - gazeMode: expected behavior of Spoony's gaze (eyes) during the expression
    - enum among the following list:
      - "Focused": Spoony keeps looking at the user during the whole expression.
      - "Distracted": Spoony might look away from the user during the expression (but it looks back at the user at the end of the expression).
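Since a reaction is short, it can be chained with speech through the List action described earlier. This sketch assumes that a Sequential List accepts ExpressiveReaction and Say objects in its "skills" array like any other skill; the helpers expression and expressiveReaction are hypothetical.

```javascript
// Hypothetical helpers, not part of SPooN's API.
const EXPRESSION_TYPES = [
  "Standard", "Happy", "Sad", "Surprised", "Scared",
  "Curious", "Proud", "Mocker", "Crazy",
];

// Builds the expression object shared by ExpressiveReaction and Say.
function expression(type, intensity, gazeMode) {
  if (!EXPRESSION_TYPES.includes(type)) {
    throw new Error(`Unknown expression type: ${type}`);
  }
  if (!(intensity >= 0 && intensity <= 1)) {
    throw new Error("intensity must be between 0 and 1");
  }
  if (gazeMode !== "Focused" && gazeMode !== "Distracted") {
    throw new Error(`Unknown gaze mode: ${gazeMode}`);
  }
  return { type, intensity, gazeMode };
}

function expressiveReaction(type, intensity, gazeMode) {
  return { id: "ExpressiveReaction", expression: expression(type, intensity, gazeMode) };
}

// Spoony reacts with surprise, then speaks, one after the other.
// Assumption: List accepts these skills in its "skills" array.
const message = {
  spoon: {
    id: "List",
    operator: "Sequential",
    skills: [
      expressiveReaction("Surprised", 0.7, "Focused"),
      {
        id: "Say",
        text: "Oh, hello there!",
        expression: expression("Happy", 0.5, "Focused"),
      },
    ],
  },
};

console.log(JSON.stringify(message));
```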

# Expressive State

Action description: Spoony will stay in a specific expressive state until told otherwise.

Message description:

```json
{
  "spoon": {
    "id": "ExpressiveState",
    "mood": {
      "type": <TypeEnum>
    }
  }
}
```

- id: ID of the Action ⇒ "ExpressiveState"
- mood: mood to be expressed by Spoony
  - type: type of the mood
    - enum among the following list: ["Standard", "Happy", "Sad", "Grumpy"]
    - "Standard": Spoony is in its usual state, not expressing any particular mood
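Because the state lasts until told otherwise, a chatbot that changes Spoony's mood should probably restore "Standard" once it is done. A minimal sketch with a hypothetical helper that validates the mood and builds both messages:

```javascript
// Hypothetical helper, not part of SPooN's API.
const MOODS = ["Standard", "Happy", "Sad", "Grumpy"];

function expressiveState(mood) {
  if (!MOODS.includes(mood)) {
    throw new Error(`Unknown mood: ${mood}`);
  }
  return { spoon: { id: "ExpressiveState", mood: { type: mood } } };
}

// The mood persists until another ExpressiveState is sent,
// so the scenario restores "Standard" when it no longer needs the sad mood.
const sad = expressiveState("Sad");
const backToNormal = expressiveState("Standard");

console.log(JSON.stringify([sad, backToNormal]));
```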

# Multiple Choice Questions

Action description: Spoony asks a question and suggests a list of expected responses (displayed on the screen as interactive buttons)

Message description:

```json
{
  "spoon": {
    "id": "MCQ",
    "question": <string>,
    "visualAnswers": <bool>,
    "visualTemplate": {
      "type": <VisualTemplateTypeEnum>,
      "buttonLayout": {
        "type": <ButtonLayoutTypeEnum>
      },
      "font": {
        "color": <string>
      }
    },
    "answers": [
      {
        "displayText": <string>,
        "content": <string>,
        "imageUrl": <string>,
        "font": {
          "color": <string>
        }
      },
      {...}
    ]
  }
}
```

- id: ID of the Action ⇒ “MCQ”

- question: question that will be asked by Spoony.

- visualAnswers: set to true if you want to display the MCQ as a menu with images, false to display only the triggers.

- visualTemplate: choose a template for the UI with the different elements, displayed as buttons with image and text. Not needed if visualAnswers is set to

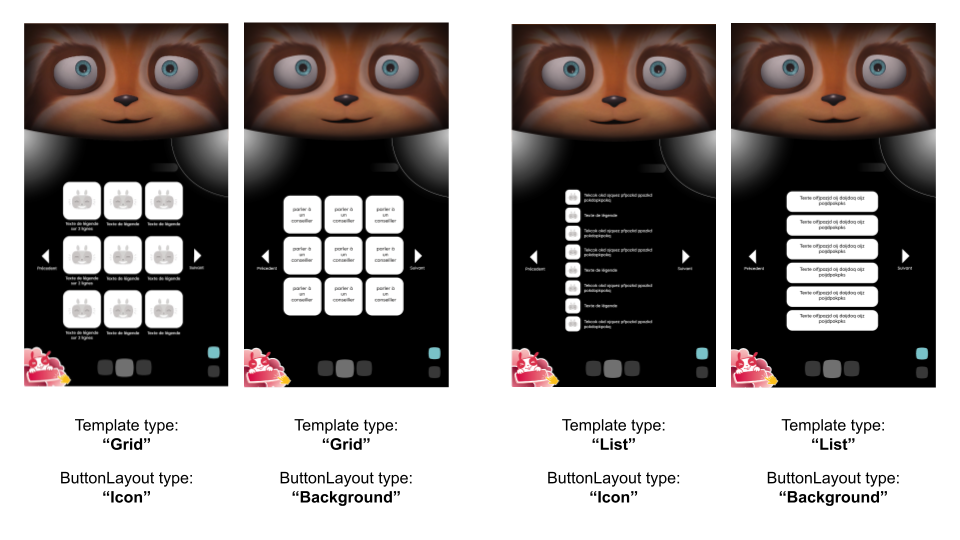

False. - type: type of the template.

- Possible values:

- "Grid": the elements are displayed as squares buttons in a grid, with multiple rows and multiple elements per row.

- "List": the elements are displayed as rectangular buttons in a vertical list, with only one element per line.

- Default value (when field not set): "Grid".

- Possible values:

- buttonLayout: define the layout of the buttons.

- type: type of the button layout.

- Possible values:

- "Icon": the text of the button is displayed next to the image.

- "Background": the text of the button is displayed on top of the image, used as a background.

- Default value (when field not set):

- "Icon" for "Grid" template type.

- "Background" for "List" template type.

- Possible values:

- type: type of the button layout.

- font: define the font of the buttons.

- color: color of the font.

- Format: hexadecimal (ex:

#ffffffff(white)). - Default value (when field not set):

#000000ff(the 0s are for the color black, thefffor the alpha value.

- Format: hexadecimal (ex:

- color: color of the font.

- answers: list of expected answers

- for each answer

- displayText: text displayed in the corresponding interactive button, that can trigger the answer.

- content: simulated input to the chatbot when answer is selected (by voice or by button validation).

- imageUrl: url of the image to be displayed with the answer. Not needed if you only want to display the triggers.

- font: specific font of the button, overrides the global font of the VisualTemplate (set in the "font" field of the "visualTemplate" field, defined under MultipleChoiceQuestions) if not null.

- color: color of the font.

- Format: hexadecimal (ex:

#ffffffff(white)). - Default value (when field not set):

#000000ff(the 0s are for the color black, thefffor the alpha value.

- for each answer

To guarantee the best display quality for your visual template, follow these recommendations for image resolutions, based on the chosen template configuration:

- For "Grid" templates, use square images with a resolution of 200px x 200px.
- For "List" templates:
  - with an "Icon" button layout, use square images with a resolution of 100px x 100px.
  - with a "Background" button layout, use rectangular images with a resolution of 480px x 108px.

WARNING

The MCQ must be the last payload you send for a given intent. If you send another message or payload after it, the response event is not guaranteed to be triggered.
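The sketch below builds an MCQ payload and spells out the documented default button layout for each template type. It is a minimal sketch: buildMcq is a hypothetical helper, the image URLs are placeholders, and the color check assumes the 8-digit #RRGGBBAA form shown in the examples above. Remember to send the result as the last payload of the intent.

```javascript
// Hypothetical helper, not part of SPooN's API.
// Assumption: colors use the 8-digit #RRGGBBAA form (e.g. #ffffffff).
const COLOR = /^#[0-9a-fA-F]{8}$/;
const DEFAULT_LAYOUT = { Grid: "Icon", List: "Background" };

function checkFont(font) {
  if (font && font.color !== undefined && !COLOR.test(font.color)) {
    throw new Error(`Invalid font color: ${font.color}`);
  }
}

// answers: [{ displayText, content, imageUrl?, font? }]
// templateType: "Grid" or "List", or null to display only the triggers.
function buildMcq(question, answers, templateType = null, font = undefined) {
  answers.forEach((answer) => checkFont(answer.font));
  const spoon = { id: "MCQ", question, visualAnswers: templateType !== null };
  if (templateType !== null) {
    if (templateType !== "Grid" && templateType !== "List") {
      throw new Error(`Unknown template type: ${templateType}`);
    }
    checkFont(font);
    spoon.visualTemplate = {
      type: templateType,
      // Written out explicitly; it matches the documented default layout.
      buttonLayout: { type: DEFAULT_LAYOUT[templateType] },
    };
    if (font) spoon.visualTemplate.font = font;
  }
  spoon.answers = answers;
  return { spoon };
}

// Placeholder image URLs; the second answer overrides the global font color.
const drinkMenu = buildMcq(
  "What would you like to drink?",
  [
    { displayText: "Coffee", content: "coffee", imageUrl: "https://example.com/coffee.png" },
    {
      displayText: "Tea",
      content: "tea",
      imageUrl: "https://example.com/tea.png",
      font: { color: "#ffffffff" },
    },
  ],
  "List",
  { color: "#000000ff" }
);

console.log(JSON.stringify(drinkMenu, null, 2));
```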

# Open Question

Action description: Spoony asks a question and waits for a free speech input. The user validates the input by clicking on the text displayed by SPooNy.

Message description:

```json
{
  "spoon": {
    "id": "OpenQuestion",
    "questionId": <string>,
    "question": <string>,
    "keyboardType": <string>
  }
}
```

- id: ID of the Action ⇒ "OpenQuestion"
- questionId: ID of the question, used to link a reaction to the answer and retrieve its content.
- question: question that will be asked by Spoony
- keyboardType: type of the keyboard displayed to help the user answer
  - by default, this value is set to null and no keyboard is displayed
  - possible values:
    - null: no keyboard is displayed
    - "Text": to enter text
    - "EmailAddress": to enter email addresses (see here how to set custom email suffixes in the keyboard)
    - "Number": to enter numbers

WARNING

The OpenQuestion must be the last payload you send for a given intent. If you send another message or payload after it, the response event is not guaranteed to be triggered.

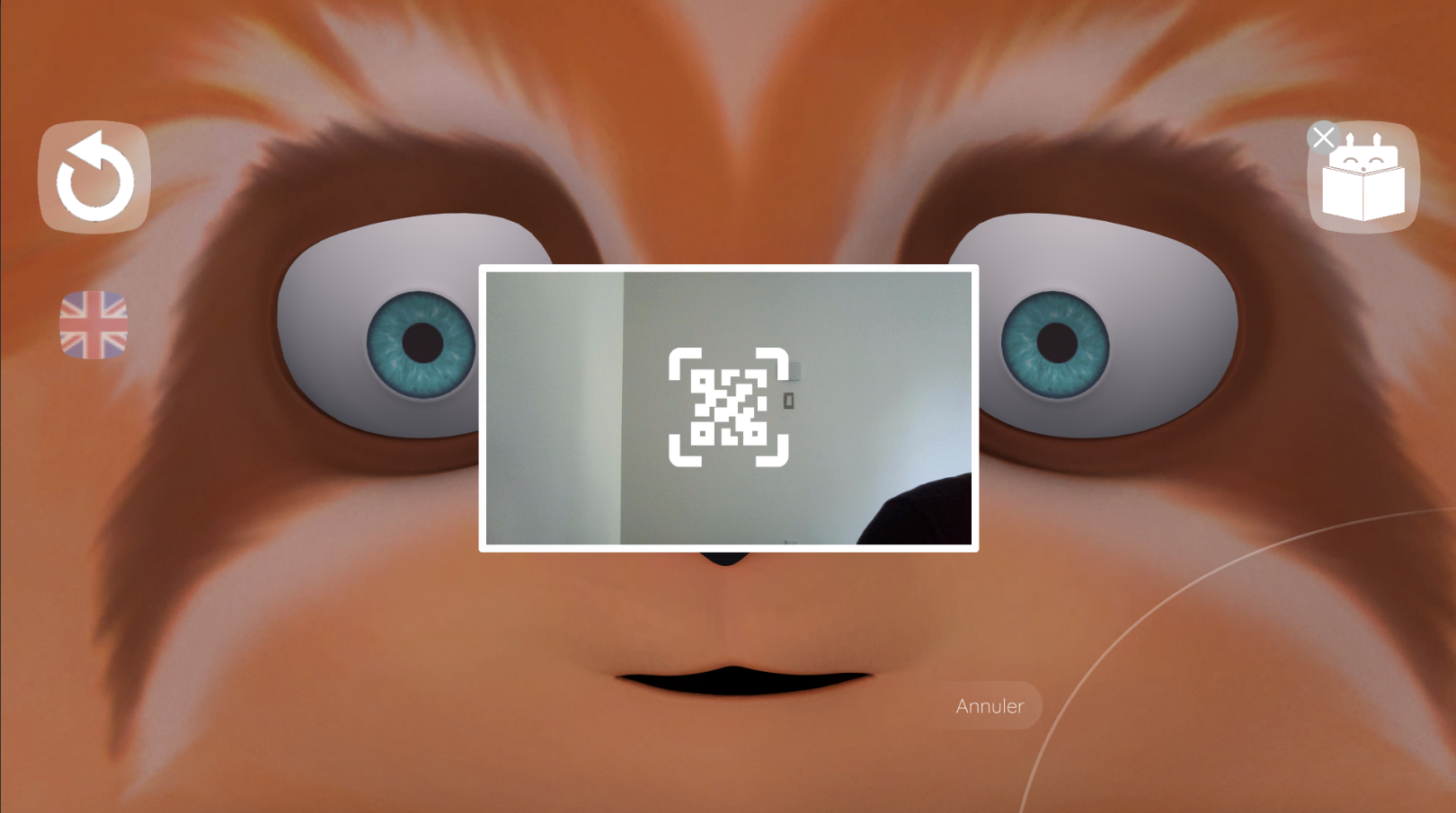
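A minimal sketch of an OpenQuestion payload built in a fulfillment, with the documented keyboard types checked up front. The helper openQuestion and the question IDs are illustrative, not part of SPooN's API.

```javascript
// Hypothetical helper, not part of SPooN's API.
const KEYBOARD_TYPES = [null, "Text", "EmailAddress", "Number"];

function openQuestion(questionId, question, keyboardType = null) {
  if (!KEYBOARD_TYPES.includes(keyboardType)) {
    throw new Error(`Unknown keyboard type: ${keyboardType}`);
  }
  return { spoon: { id: "OpenQuestion", questionId, question, keyboardType } };
}

// "user_email" and "user_name" are illustrative question IDs,
// reused later to link a reaction to the answer.
const askEmail = openQuestion("user_email", "What is your email address?", "EmailAddress");
const askName = openQuestion("user_name", "What is your name?");

console.log(JSON.stringify(askEmail));
```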

# Start QRCode Scan

Action description: Spoony starts looking for QRCodes and displays a UI showing the user that it is waiting for a QRCode. Different events are sent when the QRCode is detected or when the user cancels the QRCode detection.

Message description:

```json
{
  "spoon": {
    "id": "StartQRCodeScan",
    "resultOutput": <string>,
    "cancelOutput": <string>
  }
}
```

- id: ID of the Action ⇒ "StartQRCodeScan"
- resultOutput: (optional) the name of the event sent to the chatbot when a QRCode is detected. The default is QRCodeScan_Result.
- cancelOutput: (optional) the name of the event sent to the chatbot when the user cancels the detection. The default is QRCodeScan_Cancel.
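Since both event names are optional, a helper can simply leave them out to get the defaults. A minimal sketch; startQrCodeScan and the custom event names are hypothetical.

```javascript
// Hypothetical helper, not part of SPooN's API.
// Omitted event names fall back to QRCodeScan_Result / QRCodeScan_Cancel.
function startQrCodeScan({ resultOutput, cancelOutput } = {}) {
  const spoon = { id: "StartQRCodeScan" };
  if (resultOutput) spoon.resultOutput = resultOutput;
  if (cancelOutput) spoon.cancelOutput = cancelOutput;
  return { spoon };
}

const defaultScan = startQrCodeScan();
// Illustrative custom event names, routed to dedicated intents in the chatbot.
const ticketScan = startQrCodeScan({
  resultOutput: "Ticket_Scanned",
  cancelOutput: "Ticket_Cancelled",
});

console.log(JSON.stringify([defaultScan, ticketScan]));
```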

Once your intent is triggered by the event (using the same mechanism as described here), the decoded QRCode is available through fulfillments, either with the Dialogflow Inline Editor or with your own webhook service.

The decoded QRCode is sent in the output contexts of the request, in the detectedQRCode parameter.

Here is a Dialogflow fulfillment in JavaScript that reads the detected QRCode out loud, assuming the intent triggered by the result event is named QRCodeScan_Result:

```javascript
'use strict';

const functions = require('firebase-functions');
const {WebhookClient} = require('dialogflow-fulfillment');

process.env.DEBUG = 'dialogflow:debug'; // enables lib debugging statements

exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
  const agent = new WebhookClient({ request, response });
  console.log('Dialogflow Request headers: ' + JSON.stringify(request.headers));
  console.log('Dialogflow Request originalDetectIntentRequest: ' + JSON.stringify(request.body.originalDetectIntentRequest));
  console.log('Dialogflow Request body: ' + JSON.stringify(request.body));

  // Looks for the decoded QRCode in the output contexts of the request
  function got_qr(agent) {
    let qr_content = '';
    const contexts = agent.request_.body.queryResult.outputContexts || [];
    for (const context of contexts) {
      // Some contexts carry no parameters at all
      if (context.parameters && 'detectedQRCode' in context.parameters) {
        qr_content = context.parameters.detectedQRCode;
        break;
      }
    }
    if (qr_content === '')
      agent.add(`The QRCode seems to be empty!`);
    else
      agent.add(`Here is the decoded QRCode ${qr_content} !`);
  }

  // Run the proper function handler based on the matched Dialogflow intent name
  const intentMap = new Map();
  intentMap.set('QRCodeScan_Result', got_qr);
  agent.handleRequest(intentMap);
});
```

# Display Image

Action description: Spoony will display an image on the screen (it can also display a Gif, based on the extension of the image url)

Message description:

```json
{
  "spoon": {
    "id": "DisplayImage",
    "imagePath": <string>,
    "caption": <string>,
    "duration": <float>,
    "clearImage": <bool>
  }
}
```

- id: ID of the Action ⇒ "DisplayImage"
- imagePath: URL of the image
  - compatible extensions: png, jpg, gif
- caption: (optional) caption of the image
- duration: (optional) how long to display the image, in seconds
  - if the duration is less than or equal to zero (the default value is 0), the image is displayed until the next user input, or until HideImage is called.
- clearImage: (optional) if set to true, the image is displayed without the white background. Defaults to false (a white background is displayed around your image).
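A fulfillment can check the image extension before sending the payload. This minimal sketch, built around a hypothetical displayImage helper, only accepts the three documented extensions (so it would reject "jpeg", which SPooN may or may not accept) and ignores any query string in the URL.

```javascript
// Hypothetical helper, not part of SPooN's API.
const IMAGE_EXTENSIONS = ["png", "jpg", "gif"];

function displayImage(imagePath, { caption, duration = 0, clearImage = false } = {}) {
  // Look at the extension of the URL path only, ignoring any query string.
  const path = new URL(imagePath).pathname;
  const extension = path.slice(path.lastIndexOf(".") + 1).toLowerCase();
  if (!IMAGE_EXTENSIONS.includes(extension)) {
    throw new Error(`Unsupported image extension in ${imagePath}`);
  }
  const spoon = { id: "DisplayImage", imagePath, duration, clearImage };
  if (caption !== undefined) spoon.caption = caption;
  return { spoon };
}

// Displays the image for 5 seconds with a caption and no white background.
const welcome = displayImage("https://docs.spoon.ai/static/docs/img/HOMME-4.png", {
  caption: "Welcome!",
  duration: 5,
  clearImage: true,
});

console.log(JSON.stringify(welcome));
```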

# Hide Image

Action description: Spoony will hide the displayed image.

Message description:

```json
{
  "spoon": {
    "id": "HideImage"
  }
}
```

- id: ID of the Action ⇒ HideImage.

# Display Video

Action description: Spoony will display a video on the screen. The video will close on its own at its end, or can be cancelled by the user.

Message description:

```json
{
  "spoon": {
    "id": "DisplayVideo",
    "videoPath": <string>,
    "sourceFolder": <string>
  }
}
```

- id: ID of the Action ⇒ DisplayVideo
- videoPath: URL of the video
  - compatible with most video types
- sourceFolder: use "WEB".

At the end of the video, one of two events is sent. Use these events as triggers for your intents in your chatbot:

- Spoon_DisplayVideo_VideoViewerID_End if the user watched the whole video
- Spoon_DisplayVideo_VideoViewerID_Cancel if the user cancelled the video while it was playing

WARNING

In this version, streaming websites such as YouTube or Vimeo are not yet supported. The API takes a direct link to a video file.
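Given this limitation, a fulfillment can reject streaming links early instead of sending a video SPooN cannot play. A minimal sketch: displayVideo is a hypothetical helper, the host list only covers the two websites named above, and the video URL is a placeholder.

```javascript
// Hypothetical helper, not part of SPooN's API.
// Streaming pages are rejected because a direct link to a video file is required.
const STREAMING_HOSTS = /(^|\.)(youtube\.com|youtu\.be|vimeo\.com)$/;

function displayVideo(videoPath) {
  const { hostname } = new URL(videoPath); // throws on a malformed URL
  if (STREAMING_HOSTS.test(hostname)) {
    throw new Error(`Streaming websites are not supported: ${videoPath}`);
  }
  return { spoon: { id: "DisplayVideo", videoPath, sourceFolder: "WEB" } };
}

// Placeholder URL pointing directly at an .mp4 file.
const tutorial = displayVideo("https://example.com/videos/tutorial.mp4");

console.log(JSON.stringify(tutorial));
```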

# Volume Control

Action description: Adjust SPooNy's volume programmatically.

Message description:

```json
{
  "spoon": {
    "id": "VolumeControl",
    "setVolume": <float>,
    "changeVolume": <float>
  }
}
```

- id: ID of the Action ⇒ VolumeControl

- setVolume: (optional) for setting the volume directly to an absolute value (between 0f and 1f).

- changeVolume: (optional) for raising or lowering the volume relative to the current sound volume (value between -2f and 2f).

TIP

The volume is automatically (and silently) capped between the values set in the release.conf as described here
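A minimal sketch of a VolumeControl payload with the documented ranges checked before sending. volumeControl is a hypothetical helper; even a valid value is still capped by the limits set in release.conf.

```javascript
// Hypothetical helper, not part of SPooN's API.
// The checks only mirror the documented ranges; release.conf still caps the result.
function volumeControl({ setVolume, changeVolume } = {}) {
  const spoon = { id: "VolumeControl" };
  if (setVolume !== undefined) {
    if (!(setVolume >= 0 && setVolume <= 1)) {
      throw new Error("setVolume must be between 0 and 1");
    }
    spoon.setVolume = setVolume;
  }
  if (changeVolume !== undefined) {
    if (!(changeVolume >= -2 && changeVolume <= 2)) {
      throw new Error("changeVolume must be between -2 and 2");
    }
    spoon.changeVolume = changeVolume;
  }
  return { spoon };
}

// Set the volume to half, or lower it relative to its current value.
const half = volumeControl({ setVolume: 0.5 });
const quieter = volumeControl({ changeVolume: -0.2 });

console.log(JSON.stringify([half, quieter]));
```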

# Change Language

Action description: Spoony will change its interaction language

Message description:

```json
{
  "spoon": {
    "id": "Language",
    "language": <LanguageEnum>
  }
}
```

- id: ID of the Action ⇒ "Language"
- language: target language
  - enum among the following list: ["en_US", "fr_FR", "ja_JP", "zh_CN"]

# Wait

Action description: Spoony will wait for the given amount of time.

Message description:

```json
{
  "spoon": {
    "id": "Wait",
    "timeToWait": <float>
  }
}
```

- id: ID of the Action ⇒ “Wait”

- timeToWait: the time to wait for, in seconds

# SendChatbotEvent

Action description: Sends a chatbot event for calling an intent directly using Dialogflow's events.

Message description:

```json
{
  "spoon": {
    "id": "SendChatbotEvent",
    "eventName": <string>
  }
}
```

- id: ID of the Action ⇒ SendChatbotEvent

- eventName: the name of the event, must match the event name in Dialogflow.

# EndScenario

In your chatbot, when you think the service has been delivered, it is important that you declare that your service has ended.

If you do not declare this end, the user will not be able to do anything else with Spoony until the timeout of the chatbot is reached.

Action description: Spoony will end the current scenario (see explanation here)

Message description:

```json
{
  "spoon": {
    "id": "EndScenario",
    "status": <StatusEnum>,
    "afterEndType": <EndTypeEnum>,
    "message": <string>
  }
}
```

- id: ID of the Action ⇒ "EndScenario"
- status: the status of the ending, i.e. how the scenario ended
  - Possible values:
    - "Succeeded": the scenario finished correctly, the user got what they wanted
    - "Failed": the scenario did not finish as expected, the user did not get what they wanted
    - "CancelledByUser": the scenario stopped because the user decided to stop it
    - "Error": something went wrong
- afterEndType: behavior after the scenario ends. When a scenario has ended, the character can behave differently depending on the value of this field
  - Possible values:
    - "Passive": the character does not do anything proactively after the scenario ends
    - "Active": the character asks the user if they want to do something else (and starts the launcher if the user says "yes")
- message: scenario end message
  - Stores additional information about the scenario end (which can be used, for example, to get more precise statistics in SpoonAnalytics)
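Because a missing EndScenario blocks the user until the chatbot times out, it helps to build it in one place and validate the enums. A minimal sketch: endScenario is a hypothetical helper, the message strings are illustrative, and it assumes the message field may be omitted.

```javascript
// Hypothetical helper, not part of SPooN's API.
const STATUSES = ["Succeeded", "Failed", "CancelledByUser", "Error"];
const AFTER_END_TYPES = ["Passive", "Active"];

function endScenario(status, afterEndType, message) {
  if (!STATUSES.includes(status)) {
    throw new Error(`Unknown status: ${status}`);
  }
  if (!AFTER_END_TYPES.includes(afterEndType)) {
    throw new Error(`Unknown afterEndType: ${afterEndType}`);
  }
  const spoon = { id: "EndScenario", status, afterEndType };
  // Assumption: the message may be omitted when there is nothing to report.
  if (message !== undefined) spoon.message = message;
  return { spoon };
}

// The service was delivered; Spoony then offers to do something else.
const delivered = endScenario("Succeeded", "Active", "order_delivered");
// The user stopped the scenario; Spoony stays passive.
const cancelled = endScenario("CancelledByUser", "Passive");

console.log(JSON.stringify([delivered, cancelled]));
```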